When Humans and Other Animals Behave Irrationally

Thomas R. Zentall

University of Kentucky

Abstract

The field of comparative cognition has been largely concerned with the degree to which animals have analogs of the cognitive capacities of humans (e.g., imitation, categorization), but recently attention has been directed to behavior that is judged to be biased or suboptimal. We and some of our colleagues have studied several of these and have found that pigeons too show similar paradoxical behaviors. In the present review I will discuss three of these behaviors: sunk cost, justification of effort, and unskilled gambling. Sunk cost is the tendency to decide to spend more on a losing project because of the amount already invested. Pigeons show similar effects even when there is no ambiguity about the results of continuing versus changing alternatives. Justification of effort is the added value one often gives to a reward based on the effort exerted to obtain it. Pigeons too prefer stimuli that signal outcomes that they have had to work harder to obtain. Humans engage in unskilled gambling, like lotteries and slot machines, in which the return is typically less than the investment. And pigeons show a similar tendency to choose a low-probability, high-payoff alternative (gamble) over a more optimal, high-probability, low-payoff alternative. The fact that animals such as pigeons show behavior thought to be unique to humans suggests that the basis for such behaviors is not likely to result from culture or social mechanisms and may have basic behavioral origins.

Keywords: Sunk cost, justification of effort, gambling, contrast, pigeons

Author Note: Thomas R. Zentall, Department of Psychology, University of Kentucky, Lexington, Kentucky 40506-0044. Correspondence concerning this article should be addressed to Thomas R. Zentall at zentall@uky.edu.

I thank Jessica Stagner, Jennifer Laude, Tricia Clement, Rebecca Singer, Karen Roper, Cassandra Gipson, Andria Friedrich, Holly Miller, Kristina Pattison, Jerome Alessandri, Emily Klein, and Aaron Smith for their contribution to the research presented in this review.

The field of comparative cognition has devoted considerable attention to studying cognitive processes that have traditionally been viewed as uniquely within the human domain (see e.g., Wasserman & Zentall, 2006; Zentall & Wasserman, 2012). The goal has been to ask if other animals have cognitive abilities similar to those of humans. For example, will they imitate (Nielsen, Subiaul, Galef, Zentall, & Whiten, 2012), can they demonstrate transitive inference (McGonigle & Chalmers, 1977), can they categorize pictures (Wasserman, Brooks, & McMurray, 2015), do they have episodic memory (Zhou, Hohmann, & Crystal, 2012)? In each case putative evidence for such ability exists, and such results suggest either that other animals have similar cognitive abilities or possibly that these abilities have been attributed to higher cognitive processes than are necessary. If higher cognitive processes are not needed, the comparative research has implications for human behavior because it suggests that they may not be needed in humans either.

Comparative cognition research has devoted less attention to anomalies and contradictions in human behavior, such as the logically inconsistent behavior studied by Kahneman and Tversky (1979), Kahneman (2013), and Ariely (2010). When humans make decisions that might be called irrational or are inconsistent with optimal choice (choice that would maximize reinforcement), it may be even more important to understand the behavioral mechanisms involved because they have implications for the possibility of bringing them under behavioral control (Staw & Ross, 1978).

In the present paper we explore three examples of bias or suboptimal choice that many would be surprised to find in humans and other animals but which appear to be generally present. The first of these examples is sunk cost, in which the degree of prior investment affects the decision whether to continue “throwing good money after bad.” The second is cognitive dissonance or justification of effort, in which the effort expended in obtaining a reinforcer (or signal for reinforcement) affects the value of the reinforcer or signal. The third is the tendency to choose a low probability of a high payoff over a more optimal high probability of a low payoff, an analog of human gambling behavior. In the first two cases, subjects assign value to effort expended before the choice, although logically that effort should play no role in the decision. In the last case, subjects fail to give appropriate weight to predictable losses. In all three cases, subjects act suboptimally.

The Sunk Cost Fallacy

One example of suboptimal behavior is commonly referred to as the sunk cost fallacy. A sunk cost is a cost that has already been incurred and cannot be recovered; the fallacy consists in allowing such a cost to affect one’s future behavior. An example might be the following scenario: one goes to a movie and after half an hour decides it is quite dreadful, but one has paid good money to see it, so one sits it out to the end. Not only does one not enjoy the rest of the movie, but one could have done something more enjoyable with the time. The reason often given for this behavior is that leaving the theater would waste the money spent on the ticket, and we have been taught not to waste.

Another example of a sunk cost effect was in an experiment reported by Arkes and Blumer (1985). In this scenario one buys a ticket for a weekend ski trip to Michigan for $100. Later one buys a ticket for a weekend ski trip to Wisconsin for $50. One thinks that one will enjoy the ski trip to Wisconsin more than the Michigan ski trip. After purchasing the second ticket, one notices that the two ski trips are for the same weekend. Neither ticket is refundable, and it is too late to sell either. One must use a single ticket and not the other. Which ski trip shall one take? Although the rational decision would be to choose the Wisconsin ski trip, Arkes and Blumer found that only 46% of the subjects said they would choose that one.

At a more consequential level, some people continue to invest in a failing business because they have already invested so much in the business and the invested amount would be wasted if they gave up on it. Similarly, people may argue that they remain in a failing romantic relationship because they have invested too much in the relationship to leave.

Perhaps the most famous example of a response to sunk cost is the case of the development of the well-known Concorde plane. The supersonic aircraft was a joint project of the French and British governments. Long after it became clear that the project would generate little return on the investment, the project was continued because too much had been invested in it to quit (of course economic return may not be the only reason for continuing; see Arkes & Ayton, 1999).

Interestingly, people sometimes count on sunk cost to force themselves to continue behavior that they may find onerous. For example, people may buy a one-year gym membership because if they feel like quitting after a few visits to the gym, the investment already made may convince them to keep going.

Sunk cost also has been studied experimentally. For example, Arkes and Blumer (1985) found, paradoxically, that subjects who purchased season tickets to the theater at full price attended more plays than those who were willing to purchase tickets at full price but were offered the season tickets at half price. Apparently, the loss of the value of a full-price ticket was more aversive than the loss of the value of a half-price ticket, but of course in either case the cost of the ticket was already lost whether they attended or not. When people demonstrate the sunk cost fallacy (sometimes referred to as an escalation of commitment, McAfee, Mialon, & Mialon, 2010), they often increase their future investment in proportion to the amount already invested (Arkes & Blumer, 1985; Khan, Salter, & Sharp, 2000; Staw, 1976, 1981).

Although the sunk cost fallacy is so named because it is thought to result in suboptimal outcomes, some have argued that it may not always be evidence of irrational choices (McAfee et al., 2010). Sometimes, the greater the investment, the more likely success becomes. In that case, the greater the investment already made, the closer one should be to success. Thus, it may be rational to take the size of the sunk investment into account when deciding whether to invest further. On this view, the effect of sunk costs on the willingness to continue investing is ambiguous, and whether one should continue will hinge on how the likelihood of success changes as investment accumulates (formally, on the derivative of the hazard rate). McAfee et al. suggest that whether to invest more in a project may depend not only on the rate of progress made so far but also on whether the hazard function is increasing or decreasing. If progress is improving, added investment may be warranted, whereas if progress is declining, added investment may not be warranted.
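The role of the hazard function can be made concrete with a small sketch. Here we assume, purely for illustration, that the chance of success follows a Weibull hazard, whose shape parameter controls whether the hazard rises (shape > 1) or falls (shape < 1) as investment accumulates. McAfee et al. (2010) do not commit to this particular form; the function name `p_success_next` and the parameter values are our own hypothetical choices.

```python
import math


def p_success_next(invested: float, extra: float, shape: float, scale: float = 10.0) -> float:
    """Probability of succeeding within `extra` further units of investment,
    given no success after `invested` units, under an assumed Weibull hazard.
    shape > 1: hazard rises with investment; shape < 1: hazard falls."""
    # Cumulative hazard H(t) = (t / scale) ** shape; survival S(t) = exp(-H(t)).
    h_now = (invested / scale) ** shape
    h_later = ((invested + extra) / scale) ** shape
    # P(success in (t, t + extra] | no success by t) = 1 - S(t + extra) / S(t),
    # computed in log space to avoid underflow for large t.
    return 1.0 - math.exp(h_now - h_later)


if __name__ == "__main__":
    for shape, label in ((2.0, "rising hazard"), (0.5, "falling hazard")):
        probs = [round(p_success_next(t, 5.0, shape), 2) for t in (0, 10, 20)]
        print(label, probs)
```

Under the rising hazard, the same further investment is more likely to pay off the more has already been sunk; under the falling hazard the reverse holds, which is the ambiguity McAfee et al. point to.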

Another important factor in deciding whether to continue is consideration of the alternative. Consider the following: A company is in decline, but it decides to embark on a high-risk strategy. Halfway through the new project there is evidence that the new strategy is not working. Should the company persist or go back to its former strategy? One could argue that it might be better to continue with the high-risk strategy because the alternative might be even worse. On the other hand, although the alternative of returning to the previous strategy might be a better investment, the fact that significant resources have been expended on the current strategy might mean there are insufficient funds to return to the earlier one.

McAfee et al. (2010) note another factor that may contribute to consideration of sunk cost: reputation. In the example given earlier about the Concorde, certainly the reputation of the French and British governments played an important role in their decision not to terminate the project. Termination would have had important consequences, not only of a financial nature but also concerning whether these two governments could be counted on to follow through on their commitments. Consider the impact that termination might have had on the decision to form a European trade agreement that eventually led to the European Economic Community and later to the Eurozone.

Finally, because generally there is some uncertainty about the future, giving up may be followed by a sense of regret. Would persistence have led to success? There may be some satisfaction in continuing until there is clear resolution, even if that resolution is failure. As consideration of sunk cost appears to be based on the human characteristic of regret, an overgeneralization of a “don’t waste” rule, and maintaining one’s reputation, it would be instructive to ask whether animals too consider sunk costs in deciding to persist with an initial activity. If one can find evidence for a sunk cost effect in animals, it would suggest that complex factors may reinforce the behavior in humans but may not be responsible for it.

Sunk Cost Research With Animals

Arkes and Ayton (1999) have argued that sunk cost effects are a uniquely human phenomenon because what has been offered as evidence for such effects in other animals can be attributed to simpler explanations. For example, animals engaged in a fixed action pattern may not be sensitive to the fact that they are not successful at arriving at a goal. They will continue to expend resources toward that goal irrespective of continued failure (Dawkins & Brockmann, 1980).

More recent research suggests, however, that sunk cost effects can be found in pigeons (Navarro & Fantino, 2005). In a cleverly designed experiment, Navarro and Fantino trained pigeons to peck at a light to receive rewards. On 50% of the trials, 10 pecks (FR10) were required for reinforcement; on 25% of the trials, 40 pecks (FR40) were required; and on 12.5% of the trials each, either 80 pecks (FR80) or 160 pecks (FR160) were required. However, at any time the pigeons could peck a second key to start a new trial after a 1-s delay. Under these contingencies, the ideal strategy would be to peck the reward key 10 times and, if reinforcement was not forthcoming, to start a new trial. When a change in key light signaled that one of the longer schedules was in effect, the pigeons started a new trial efficiently. However, when no change in key light was provided, three of the four pigeons completed the high FR trials and did not peck the other key to start the next trial (see also Macaskill & Hackenberg, 2012; Magalhães & White, 2014).
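The claim that switching after 10 pecks is ideal can be checked with a little arithmetic. The sketch below is our own illustration rather than the authors’ analysis: it treats each trial as independent and computes the expected number of pecks per reinforcer for a bird that abandons any trial still unreinforced after a fixed number of pecks. It ignores the 1-s delay and the move to the new-trial key, and the function name `pecks_per_reinforcer` is hypothetical.

```python
from fractions import Fraction
from typing import Optional

# Schedule mix as described for Navarro and Fantino (2005): ratio -> probability
SCHEDULES = {10: Fraction(1, 2), 40: Fraction(1, 4), 80: Fraction(1, 8), 160: Fraction(1, 8)}


def pecks_per_reinforcer(switch_after: int, schedules=SCHEDULES) -> Optional[Fraction]:
    """Expected pecks per reinforcer if the bird abandons any trial that is
    still unreinforced after `switch_after` pecks (delays are ignored)."""
    # A trial pays off only if its ratio is met before the bird gives up.
    p_success = sum(p for ratio, p in schedules.items() if ratio <= switch_after)
    if p_success == 0:
        return None  # switching this early is never reinforced
    # Pecks spent on a trial: the full ratio if met, otherwise `switch_after`.
    pecks_per_trial = sum(p * min(ratio, switch_after) for ratio, p in schedules.items())
    # Trials are independent, so cost per reinforcer = cost per trial / P(success).
    return pecks_per_trial / p_success


if __name__ == "__main__":
    for k in (10, 40, 80, 160):
        print(k, float(pecks_per_reinforcer(k)))
```

Under these assumptions, switching after 10 pecks costs 20 pecks per reinforcer, switching after 40 costs about 33, and always completing the trial costs 45, which is why persisting on the unsignaled high-ratio trials counts as suboptimal.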

Although these experiments suggest that pigeons tend to persist with the current schedule longer than they should, several factors should be considered before concluding that their decision is irrational. To begin with, starting a new trial after exactly 10 pecks would require that the pigeons be able to count to 10, and there is no evidence that pigeons can do this. Instead, the pigeons must determine that 10 or more pecks have already been made. Given that the consequences of making fewer than 10 pecks and starting the next trial prematurely would be much worse than making a few extra pecks, one would expect pigeons to err in the direction of making too many pecks (i.e., to be certain that no fewer than 10 pecks had been made). Although it is difficult to estimate how many pecks that would be, it would certainly be greater than 10. Furthermore, once the pigeon was certain that it had surpassed 10 pecks, it would also be difficult for the pigeon to estimate the number of remaining pecks that would be required to reach 40. Of course, starting a new trial would mean making only 10 more pecks, and that should be discriminable from the number of pecks required to reach 40, but three other factors should be taken into account. First, starting a new trial incurs a delay that requires moving away from the reinforcement key to the new-trial key, a key that never provides reinforcement. Second, pecking the new-trial key results in a 1-s blackout that is likely to be more aversive than the 1-s delay of reinforcement. Finally, the delay of reinforcement associated with staying with the reinforcement key or starting a new trial was probabilistic. Starting a new trial would provide reinforcement sooner only 50% of the time; the other half of the time, the delay would be even longer.
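The argument that an imprecise counter should overshoot 10 pecks can be illustrated by adding noise to the switch point. The sketch below assumes, hypothetically, that on each trial the bird gives up after a number of pecks drawn uniformly from a window around its intended target; this noise model and the function name `noisy_pecks_per_reinforcer` are our assumptions, not part of the original studies, and delays are again ignored.

```python
from fractions import Fraction

SCHEDULES = {10: Fraction(1, 2), 40: Fraction(1, 4), 80: Fraction(1, 8), 160: Fraction(1, 8)}


def noisy_pecks_per_reinforcer(target: int, spread: int, schedules=SCHEDULES) -> Fraction:
    """Expected pecks per reinforcer when, on each trial, the bird gives up after
    a number of pecks drawn uniformly from target - spread .. target + spread.
    The uniform noise model is a hypothetical assumption for illustration."""
    switch_points = range(target - spread, target + spread + 1)
    p_k = Fraction(1, len(switch_points))
    p_success = Fraction(0)
    pecks_per_trial = Fraction(0)
    for k in switch_points:
        for ratio, p_r in schedules.items():
            # Pecks spent: the full ratio if it is met before giving up, else k.
            pecks_per_trial += p_k * p_r * min(ratio, k)
            if ratio <= k:
                p_success += p_k * p_r
    if p_success == 0:
        raise ValueError("this switching policy is never reinforced")
    return pecks_per_trial / p_success


if __name__ == "__main__":
    for target in (10, 12, 14, 16):
        print(target, float(noisy_pecks_per_reinforcer(target, spread=4)))
```

With a counting error of up to four pecks in either direction, aiming at exactly 10 yields 34 pecks per reinforcer, because premature switches waste many FR10 trials. Among the targets shown, aiming at 14, just high enough that the bird never quits before 10, does best at 24, consistent with the expectation that an uncertain counter should err toward too many pecks.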

In the real world in which human sunk cost effects are found, outcomes are likely to be probabilistic, as well. Furthermore, those probabilities may be difficult to calculate. A financial advisor might say that the probability of success of a new business is small but that that is a best guess without taking into consideration several unknowns. In the pigeon experiments, the fixed probability of each of the outcomes makes the contingencies more certain, but it does not eliminate the probabilistic nature of the consequences of the decision to stay with the current uncertain fixed ratio or start a new trial in which the fixed ratio is also uncertain. That uncertainty may bias the animal to stay with the current trial rather than advance to the next trial.

Although the uncertainty of staying versus starting the next trial comes closer to the choices that humans make in the real world, we were interested in whether pigeons would show a sunk cost effect when there was little uncertainty in the consequences of staying versus switching. The question we asked was whether a pigeon would show a sunk cost effect by continuing to complete a known fixed ratio of responding rather than switch to a smaller fixed ratio. That is, would a pigeon take its current investment (the number of pecks already made) into account in deciding whether to complete the required number of pecks or switch to a different alternative that required fewer pecks to reinforcement?

In our first experiment (Pattison, Zentall, & Watanabe, 2012), we first trained pigeons to peck a red stimulus 30 times for reinforcement and a green stimulus 15 times for reinforcement. We then trained the pigeons to start by pecking the red stimulus on the center key a number of times (5, 10, 15, 20, or 25), after which the center key was turned off and one of the side keys was turned on. If the side key was red, the pigeon had to complete the remaining pecks to red (25, 20, 15, 10, or 5, respectively) for reinforcement; if it was green, the pigeon always had to peck it 15 times, regardless of how many pecks it had already made to the red center key. Thus, on some trials the pigeons had to complete the pecks to red, and on other trials they had to peck green 15 times. After several sessions of forced trials that allowed the pigeons to experience the consequences of continuing to peck red or switching to green, we gave them choice trials and found that they had a significant bias to complete the pecks to the red key.
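Because both requirements in this design are fixed, the choice that minimizes pecks to reinforcement can be written down directly. The sketch below (the function name `optimal_choice` is ours, for illustration) compares only the pecks still required on each alternative; the pecks already made enter only through how many remain.

```python
def optimal_choice(invested: int, total_required: int, alternative_ratio: int) -> str:
    """Return the choice that minimizes the pecks still needed for food.
    Pecks already made matter only through how many remain; beyond that
    they are sunk and should not bias the choice."""
    remaining = total_required - invested
    if remaining < alternative_ratio:
        return "continue"
    if remaining > alternative_ratio:
        return "switch"
    return "indifferent"


if __name__ == "__main__":
    # Experiment 1 as described: red requires 30 pecks in total, green requires 15.
    for invested in (5, 10, 15, 20, 25):
        print(invested, optimal_choice(invested, total_required=30, alternative_ratio=15))
```

On this logic, a bird that has made 5 or 10 pecks to red should switch to green, a bird that has made 15 is indifferent, and only after 20 or 25 pecks is completing red the better option. A bias toward red at the lower investment levels is therefore what marks the sunk cost effect.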

To test the hypothesis that the pigeons preferred the variable pecks to the red stimulus over the fixed pecks to the green stimulus, independent of the number of pecks invested, in Experiment 2, we tested the pigeons with the same choice but without an initial investment. Had they learned the rule that a total of 30 pecks would be required to red, trials with no initial investment would have implied that 30 pecks would be required to the red side key, whereas only 15 pecks would be required to the green key. On the other hand, if red was preferred merely because of the variable number of pecks required to the red key following the initial investment, the red key should still be preferred. On these test trials we found that the pigeons had a clear preference for the green key. Thus, it was not just an attraction to the variable number of pecks required to the red key that determined the pigeons’ preference in Experiment 1.

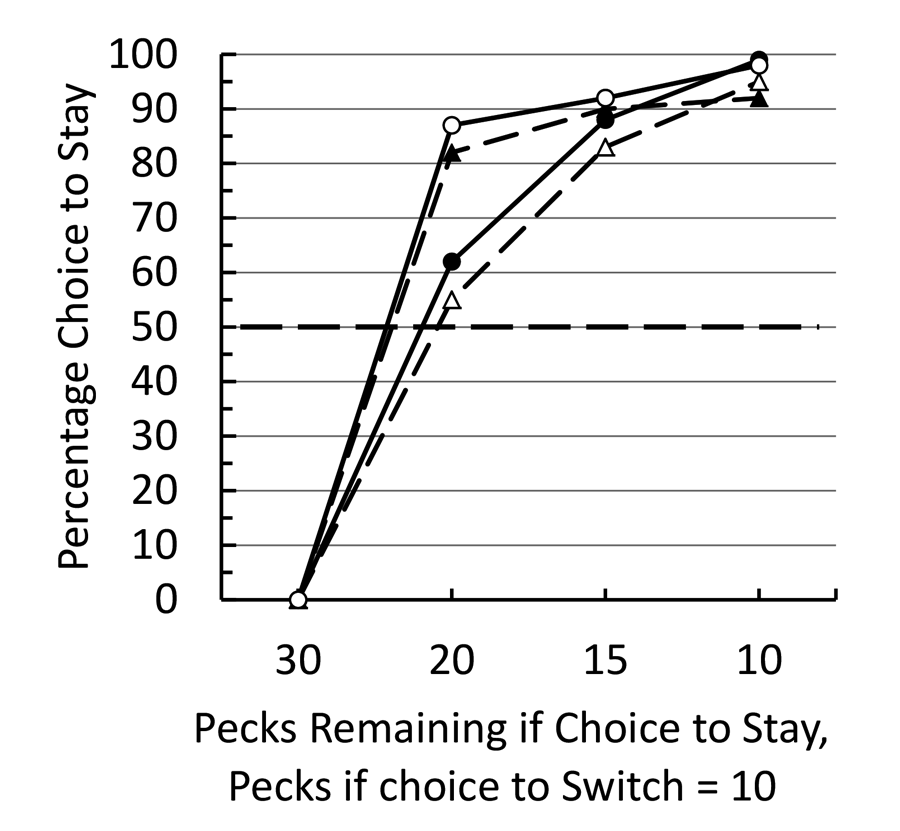

In our third experiment (Pattison et al., 2012), we modified the procedure such that the initial stimulus appeared on one of the side keys. After the initial investment (5 to 25 pecks) the initial stimulus went off and the center key was turned on (white). A single peck to the white center key turned on the two side keys. Returning to the original colored side key required the pigeon to complete the remaining number of pecks (25 to 5, respectively), whereas if the pigeon switched to the low fixed ratio color it required only 10 pecks for reinforcement. In this case we found an even stronger sunk cost effect (see Figure 1). At every level of prior investment, we found a preference to complete the 30 pecks required for reinforcement rather than switch to the fixed ratio 10. We attributed the stronger effect in Experiment 3 to the fact that the initial investment and the choice to complete the 30 pecks occurred at the same spatial location, and there is evidence of considerable generalization decrement when pigeons match the same color on two different keys (Lionello-DeNolf & Urcuioli, 2000).
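The cost of that preference can be estimated in the same spirit. The sketch below assumes, as an illustration only, that the five investment levels occurred equally often (the text does not say), and computes how many extra pecks per trial a bird pays by always completing the 30-peck requirement instead of taking the fixed ratio 10 whenever it is cheaper. The function name is hypothetical.

```python
from fractions import Fraction


def excess_pecks_always_complete(investments, total_required, alternative_ratio):
    """Mean extra pecks per trial from always completing the original ratio,
    relative to taking the cheaper option on every trial.
    Assumes each investment level occurs equally often (hypothetical)."""
    extras = []
    for invested in investments:
        remaining = total_required - invested
        best = min(remaining, alternative_ratio)
        extras.append(remaining - best)  # zero whenever completing is optimal
    return Fraction(sum(extras), len(extras))


if __name__ == "__main__":
    # Experiment 3 as described: 30 pecks in total versus a fixed ratio of 10.
    print(excess_pecks_always_complete((5, 10, 15, 20, 25), 30, 10))
```

Under that assumption, always completing costs 6 extra pecks per trial on average, all of it incurred at the three lowest investment levels, where more than 10 pecks remained.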

Figure 1. After training pigeons to peck green 30 times or red 10 times for food, they were trained to peck green a variable number of times and could choose between completing the remaining pecks to green or pecking red 10 times. The data are presented for each of the four pigeons.

Gibbon and Church (1981) found a similar phenomenon using time rather than number of pecks as the initial versus constant requirement. Although that was not the purpose of their experiments, they found a bias of almost 20 percentage points toward continuing with the “time left” alternative when the standard time became available. Thus, when the time left equaled the standard time, pigeons preferred the time left about 70% of the time.

The importance of these experiments is that the tendency to stay with the stimulus of the original investment does not appear to depend on uncertainty about the requirements for reinforcement. In all of the earlier research and in most human examples of the sunk cost effect, there is the possibility that continuation will result in a better outcome than termination. The closest example of a human sunk cost effect that does not involve probabilistic outcomes is the ski trip example described earlier. That choice involved two sunk investments, $100 for the Michigan trip and $50 for the Wisconsin trip, together with the judgment that the less expensive Wisconsin trip would be more enjoyable. Nevertheless, more than half of the subjects said that they would go on the more expensive ski trip, a clear example of a sunk cost effect in which the outcomes would not be considered probabilistic.

Once a decision has been made to consider the sunk cost of one’s prior effort, humans may attempt to justify that decision by modifying their prior belief. In the above example, one might reason that although skiing in Wisconsin might be more enjoyable, the Michigan ski trip has certain advantages that one had not considered. Perhaps one has not taken into account that the Michigan ski area is easier to drive to or the amenities at the lodge might be better. Such modification of prior belief is often described as a response to cognitive dissonance, the dissonance that may result from the discrepancy between one’s behavior (going on the Michigan ski trip) and one’s belief (the Wisconsin ski trip would have been more enjoyable). This brings us to the second line of research that asks if suboptimal behavior typically thought of as unique to humans can also be found in other animals.

Cognitive Dissonance

Cognitive dissonance can be defined as the discomfort that results from the occurrence of a discrepancy between one’s beliefs and one’s behavior. To resolve that discrepancy, it is assumed that one must find a way to modify one’s beliefs. Research with humans on cognitive dissonance can be traced back to the classic experiment by Festinger and Carlsmith (1959) in which humans were asked to take part in a boring task involving turning pegs in a peg board for an hour. The subjects were then paid either $1 or $20 to tell a waiting subject that the task was interesting. But before they did, they were asked to evaluate the experiment. Results indicated that the subjects who were paid only $1 rated the dull task as more enjoyable than those who were paid $20. The results are counterintuitive. One might think that the larger reward would be associated with judging that the task was more interesting. The authors explain their findings as follows: Being paid only $1 is not sufficient incentive for lying, so those who were paid $1 experienced dissonance. They could overcome that dissonance by coming to believe that the task was more interesting. Being paid $20, however, provided sufficient reason for lying, so there was no dissonance.

Bem (1967) argued that one does not have to hypothesize that dissonance is involved in the cognitive dissonance effect reported by Festinger and Carlsmith (1959). According to Bem, people look for reasons for their behavior. Those who were paid $20 used that as the reason for being willing to lie. Those who were paid $1 reasoned that it must not be a lie. Whether animals experience dissonance of this kind or look for reasons to explain their behavior is questionable, and if one could find a similar result in other animals it would suggest that a simpler mechanism might be responsible for this curious human behavior.

Although the experimental design used by Festinger and Carlsmith (1959) is clearly too complex to translate into a task that could be used with other animals, Aronson and Mills (1959) examined a version of cognitive dissonance called justification of effort that could more easily be adapted for use with other animals. In their experiment, a group of university students who volunteered to join a discussion group on the topic of the psychology of sex were asked to read a short passage to the experimenter. Subjects in the mild-embarrassment condition were asked to read aloud a list of sex-related words, whereas those in the severe-embarrassment condition were asked to read aloud a list of highly sexual words. Control subjects were not required to read aloud. All subjects then listened to a recording of a discussion about sexual behavior in animals that was reasonably dull and unappealing. When later asked to rate the group and its members, the control and mild-embarrassment groups did not differ, but the severe-embarrassment group’s ratings were significantly higher. This group, whose initiation process was more difficult, appeared to have increased the subjective value of the discussion group, presumably to resolve the dissonance (in this case between wanting to be part of the discussion and finding it embarrassing to read the material aloud). The advantage of such a design is that it focuses on the value of an outcome as influenced by the effort (in this case embarrassment) that preceded it. Presumably, the embarrassment and the desire to join the group created dissonance that was resolved by attributing greater value to the group.

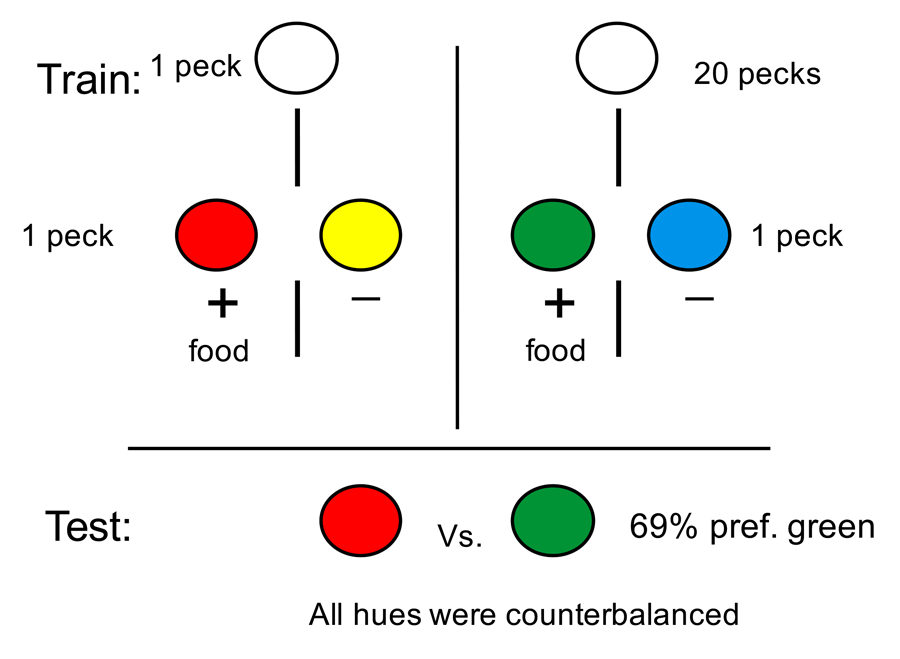

Justification of Effort With Animals

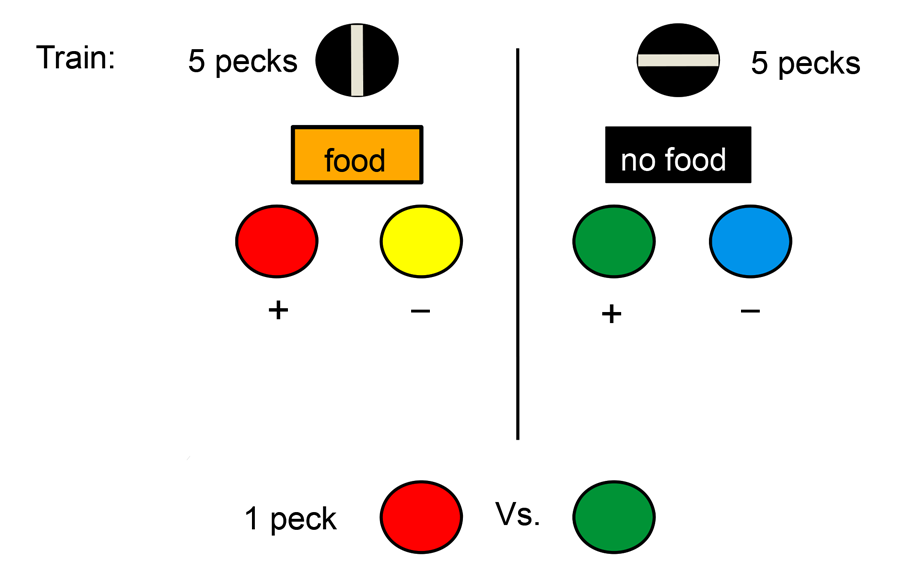

The question we asked was whether an animal would attribute greater value to a reward if greater effort was required to obtain the reward (Clement, Feltus, Kaiser, & Zentall, 2000). Although we could have looked for rewards of different but similar value and manipulated the effort required to obtain each to see if the value of the rewards had changed, instead we held the actual rewards constant and used colored lights as surrogates or conditioned reinforcers to signal the occurrence of the rewards (see design in Figure 2). More specifically, we asked pigeons to peck at a white light. On some trials, a single peck was sufficient to turn off the white light and replace it with a red light. And pecking the red light led to reinforcement. On other trials, 20 pecks were required to turn off the white light and replace it with a green light. And pecking the green light led to reinforcement. Thus, red and green lights were equally followed by the same reinforcement. To be sure that the pigeons were attending to the red and green colors, we presented them in the context of a simultaneous discrimination. Thus, when the red stimulus was presented, it was accompanied by a yellow stimulus and pecks to the red stimulus were reinforced but pecks to the yellow stimulus were not. Similarly, when the green stimulus was presented, it was accompanied by a blue stimulus and pecks to the green stimulus were reinforced but pecks to the blue stimulus were not. Following over 20 sessions of training with this procedure, when we gave the pigeons a choice between the red and green colors, the two colors associated with reinforcement, they showed a significant preference for the color that followed 20 pecks. That is, the color that had required greater effort to obtain was preferred over the color that required less effort to obtain.

Figure 2. On some trials a single peck was required to the white key and the pigeon had a choice between red and yellow stimuli (red was always correct). On other trials 20 pecks to the white key were required and the pigeon had a choice between green and blue stimuli (green was always correct). On test trials the pigeon was given a choice between the red and green stimuli.

A similar result was reported by Kacelnik and Marsh (2002) with starlings that on some trials had to make 16 flights between two perches to obtain one colored light followed by reinforcement and on other trials had to make only four flights between the two perches to obtain a different colored light followed by the same reinforcement. When given a choice between the two colored lights, similar to the results of Clement et al. (2000), the starlings preferred the color that they had had to work harder to obtain.

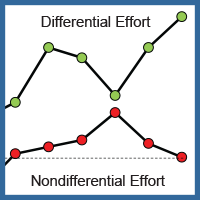

To interpret this result as evidence of cognitive dissonance or justification of effort would have seemed unparsimonious. Although animals may have beliefs, any discrepancy between those beliefs and their behavior would not likely result in dissonance. Similarly, there would be no need for a pigeon to have to justify the effort that it had to expend to obtain a green light by believing that the food signaled by the green light was better than the food signaled by the red light. Instead, we proposed that contrast between the state of the pigeon immediately preceding the appearance of the colors and the appearance of the colors might account for the value given to the color (Clement et al., 2000). Specifically, we proposed that the pigeon was likely to be in a more negative hedonic state as it came close to meeting the 20 peck requirement than when it met the single peck requirement, and the contrast produced by the appearance of the green light gave the green light its additional value (see Figure 3). After examining various contrast effects that had been studied, we called this effect within-trial contrast.

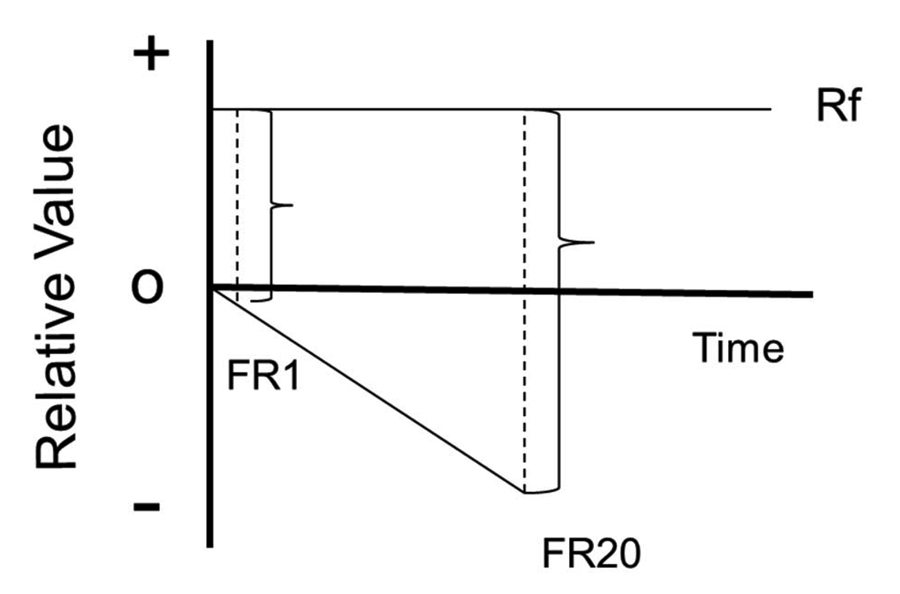

Figure 3. The contrast model of the justification of effort effect. Each trial starts with the relative value at zero. Each initial peck results in a slightly greater negative state. The appearance of the signal for reinforcement results in a larger change in state following 20 pecks than following 10 pecks.
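The contrast account can be expressed as a toy model. In the sketch below, which is our own illustration and not a model fitted by Clement et al. (2000), each required peck lowers the pigeon’s hedonic state by a fixed amount, and a signal for reinforcement gains extra value in proportion to how much better things get when it appears. The linear form, the parameter values, the function name `signal_value`, and the relative-value choice rule are all assumptions.

```python
def signal_value(pecks_before_signal: int, base_value: float = 1.0,
                 cost_per_peck: float = 0.02, contrast_weight: float = 0.5) -> float:
    """Value acquired by a conditioned reinforcer under a simple within-trial
    contrast rule. All parameter values are arbitrary illustrations."""
    # Hedonic state just before the signal: each required peck makes it a bit more negative.
    state_before = -cost_per_peck * pecks_before_signal
    # Contrast: size of the improvement when the signal for food appears.
    contrast = base_value - state_before
    return base_value + contrast_weight * contrast


if __name__ == "__main__":
    red = signal_value(1)     # color that followed a single peck
    green = signal_value(20)  # color that followed 20 pecks
    share = green / (green + red)  # relative-value choice rule (an assumption)
    print(f"red={red:.2f} green={green:.2f} predicted choice of green={share:.2f}")
```

Any positive cost per peck and positive contrast weight predict a preference for the color that followed 20 pecks; the size of that preference depends entirely on the assumed parameters.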

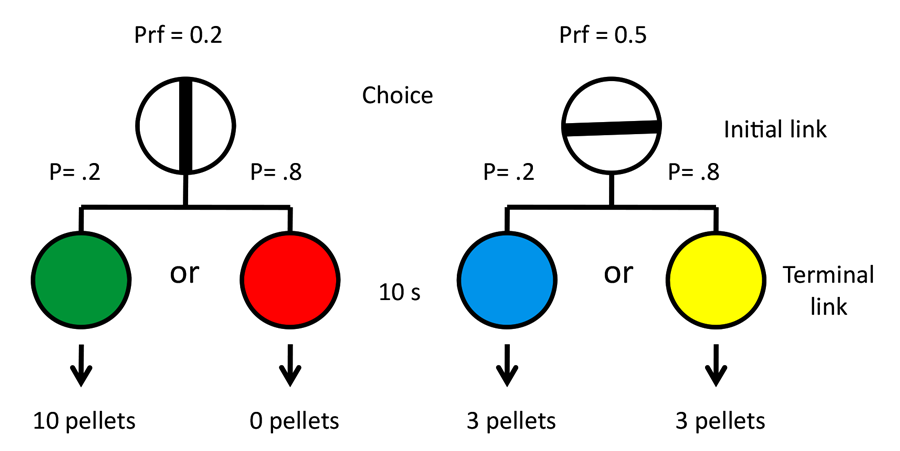

The advantage of this within-trial contrast account is that it is not specific to the differential peck requirement used by Clement et al. (2000). Because the theory is based on the relative hedonic state of the organism prior to the appearance of reinforcement or the conditioned reinforcer that signals it, the theory predicts that any relatively aversive event that precedes the colored stimuli should produce a similar preference for the stimulus that follows it. To test this prediction, we asked whether differential delay would produce a similar effect (DiGian, Friedrich, & Zentall, 2004). In this experiment, on some trials, pecks to a white stimulus resulted immediately in a choice between a red stimulus and a yellow stimulus, and choice of the red stimulus was reinforced. On other trials, pecks to a white stimulus resulted in a delay of 6 s followed by a choice between a green stimulus and a blue stimulus, and choice of the green stimulus was reinforced. On test trials, when given a choice between the red and green stimuli, the pigeons showed only a weak preference for the stimulus that followed the delay. However, in a follow-up experiment we found that if we signaled to the pigeon that the delay was coming (with a vertical line on the white key) or was not coming (with a horizontal line on the white key), the preference for the color that followed the delay was much stronger (see design in Figure 4). That is, the ability of the pigeon to anticipate the delay appeared to magnify the contrast effect.

As a further test of the within-trial contrast hypothesis, we repeated the DiGian et al. (2004) experiments using food and the absence of food as the differential hedonic events (Friedrich, Clement, & Zentall, 2005). We reasoned that in the context of food on some trials the absence of food on other trials would be a relatively aversive event that might increase the attractiveness of the conditioned reinforcer that followed. Thus, one of the colors was preceded by food and the other was preceded by the absence of food (see design in Figure 5). Once again, on test trials when the pigeons were given a choice between the two conditioned reinforcers, the color that was preceded by the absence of food was preferred over the color that was preceded by food. And once again, we found that signaling that food was or was not coming with line-orientation stimuli amplified the magnitude of the effect.

When these results were presented to colleagues, behavioral ecologists who study animals in their natural environment, they were curious to know why animals might develop such preferences. That led us to speculate that food found far from an animal’s home base might be valued more than food found close to home. Such a bias would not necessarily encourage the animal to travel greater distances to obtain food, but it might partially compensate for the greater effort required to obtain distant food and thus encourage the animal to keep looking. To test this hypothesis, one could give an animal a choice between food found close to home and food found far from home, but we chose to use an operant analog of that procedure: the pigeon had to work hard (30 pecks) for food at one location (e.g., the left feeder) and very little (one peck) for food at a different location (e.g., the right feeder), and we then determined which feeder the pigeon preferred (Friedrich & Zentall, 2004). In this experiment, we assessed each pigeon’s initial feeder preference and monitored the change in feeder preference as a function of training. As training progressed, the pigeons showed a significant shift toward the feeder that required more work, whereas a control group, for which the peck requirement was uncorrelated with feeder location, showed no such shift. Furthermore, the preference developed rather slowly (over the course of 72 sessions of training; see Figure 6).

Figure 6. Shift in preference away from the preferred reinforcement location resulting from training with the 30-peck requirement to obtain food from that location (after Friedrich & Zentall, 2004).

In an interesting variation on manipulating the aversiveness of a prior event, Marsh, Schuck-Paim, and Kacelnik (2004) trained European starlings to peck a lit response key that was one color (e.g., red) on trials when they were prefed (presumably minimally hungry) and another color (e.g., green) on trials when they were not prefed (presumably hungrier). On test trials, when the starlings were given a choice between the red and green response keys (on some test trials they were prefed and on others they were not), they showed a significant preference for the color that in training had been associated with the absence of prefeeding, and that preference was unaffected by whether they were prefed at the time of testing (see also Pompilio, Kacelnik, & Behmer, 2006; Vasconcelos & Urcuioli, 2008).

Results of Similar Experiments With Humans

The hypothesis that the procedure used with animals is analogous to the justification of effort phenomenon studied in humans would be strengthened if similar results could be found with humans using the same procedure. Just such findings were reported in an experiment with children by Alessandri, Darcheville, and Zentall (2008). The children were trained, on some trials, to click a computer mouse once on an initial stimulus to obtain one simultaneous shape discrimination and, on other trials, to click 20 times to obtain a different shape discrimination. When the children were then given a choice between the two positive shapes, they showed a significant preference for the shape that had required 20 clicks to obtain. Similarly, when university students were given a comparable task, they showed a very similar preference (Klein, Bhatt, & Zentall, 2005). Furthermore, when the students were asked about their rationale for choosing the positive shape that followed the 20 clicks, most of them said they had just guessed, and few of them had even noticed the relation between the number of mouse clicks and the discrimination that followed. Thus, the process underlying this contrast effect may be automatic and may not require awareness. Alessandri, Darcheville, Delevoye-Turrell, and Zentall (2008) repeated the study with university students but used differential pressure on a transducer as the prior aversive event and found similar results.

The Delay Reduction Hypothesis

An alternative account of the stimulus preference that follows the more aversive prior event is the delay reduction hypothesis proposed by Fantino and Abarca (1985). According to delay reduction theory, the value of a conditioned reinforcer depends on the degree to which it predicts reinforcement, relative to its absence. Thus, although the delay to reinforcement was the same in the presence of both conditioned reinforcers, according to delay reduction theory, it is the duration of the conditioned reinforcer relative to the total duration of the trial that determines its value. In the case of 20 pecks or a 6-s delay, the conditioned reinforcer that follows would occur relatively closer to reinforcement, as a proportion of the trial, than after a single peck or no delay; thus it should be a stronger conditioned reinforcer. Similarly, when the absence of reinforcement is the relatively aversive event, the time since the prior reinforcement would have been longer; thus the conditioned reinforcer would occur relatively closer to reinforcement than when reinforcement preceded it. The only result that appears to be inconsistent with the delay reduction account is the one reported by Alessandri, Darcheville, et al. (2008), in which the prior event was the pressure applied to a transducer by human adults, because differential pressure, unlike differential pecking or delay, need not lengthen the trial.
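The proportional idea behind this account can be sketched in a few lines. This is a minimal illustration under stated assumptions: following the simplified description above, a conditioned reinforcer's value is taken to be (T − t)/T, where T is the trial's total time to food and t is the time from the conditioned reinforcer's onset to food. Fantino's own formulation uses the average time to reinforcement across the session rather than a single trial, and the function name and all durations below are hypothetical.

```python
def delay_reduction_value(trial_duration: float, cr_duration: float) -> float:
    """Proportional delay reduction signalled by a conditioned reinforcer (CR).

    trial_duration: time from trial onset to food, in seconds (T).
    cr_duration: time from CR onset to food, in seconds (t).
    Returns (T - t) / T, the fraction of the total wait already over when the CR appears.
    """
    if not 0 < cr_duration <= trial_duration:
        raise ValueError("expected 0 < cr_duration <= trial_duration")
    return (trial_duration - cr_duration) / trial_duration


# Hypothetical durations: the CR lasts 10 s until food on every trial,
# and only the event that precedes it differs.
CR_DURATION = 10.0
SHORT_PRIOR = 1.0  # e.g., a single peck (assumed to take about 1 s)
LONG_PRIOR = 6.0   # e.g., the 6-s delay

v_short = delay_reduction_value(SHORT_PRIOR + CR_DURATION, CR_DURATION)
v_long = delay_reduction_value(LONG_PRIOR + CR_DURATION, CR_DURATION)
print(f"CR after short prior event: {v_short:.3f}")  # 0.091
print(f"CR after long prior event:  {v_long:.3f}")   # 0.375
```

Because the CR's own duration is held constant, only the length of the preceding event changes its value in this sketch; a manipulation that alters effort without altering time leaves T, and therefore the predicted value, unchanged.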

To distinguish between the within-trial contrast and delay reduction accounts of conditioned reinforcer preference, Singer and Zentall (2011) held the duration of the prior event constant and manipulated the schedule required to obtain the simultaneous discrimination. To obtain one simultaneous discrimination, the pigeons had to complete a modified fixed interval schedule (FI20; the first peck after 20 s produced the discrimination, modified as noted below). To obtain the other simultaneous discrimination, the pigeons had to complete a differential reinforcement of other behavior schedule (DRO20; the absence of pecking for 20 s produced the discrimination). The two schedules were alternated and were signaled by a vertical or horizontal line orientation on the key, and the duration of the FI schedule was modified to match the duration of the DRO schedule, trial by trial. To test within-trial contrast, it is important to first determine which of the two schedules is less preferred, and although it might seem obvious to some that schedules that do not require pecking would be preferred over those that require pecking, there is reason to believe that once time has been controlled for, pecking plays little role in schedule preference (Fantino & Abarca, 1985).

Interestingly, there were large individual differences in preference between the two schedules. Some pigeons preferred the FI schedule, others preferred the DRO schedule, and most of the pigeons were relatively indifferent between the two. Nevertheless, each pigeon's schedule preference accurately predicted its preference for the conditioned reinforcer that followed, and the correlation was negative: the conditioned reinforcer that followed a pigeon's less preferred schedule was the one it preferred. The results of this experiment provided strong support for the within-trial contrast account of the conditioned reinforcer preferences found in these studies.

Failures to Replicate

Several experiments, however, have failed to replicate the results reported by Clement et al. (2000). Although it is difficult to interpret the results of studies that fail to replicate an effect, the procedures used in these studies may identify limiting conditions under which the phenomenon can be reliably found. Vasconcelos, Urcuioli, and Lionello-DeNolf (2007) conducted several experiments under conditions that closely approximated those of Clement et al. In those experiments, pigeons were trained to criterion on the simultaneous discriminations and were then given 20 sessions of overtraining, which may not have been enough. As mentioned earlier, when Friedrich and Zentall (2004) required greater effort to obtain reinforcement from one feeder and assessed feeder preference regularly throughout training, they found that 72 sessions of training were required to demonstrate a significant shift in preference toward that feeder relative to controls. Similarly, when Singer and Zentall (2011) manipulated the schedule (FI or DRO) prior to presentation of the simultaneous discriminations, they found that preference for the conditioned reinforcer that followed the less preferred schedule developed slowly and required 60 sessions of training before it was statistically significant. Thus, conditioned reinforcer preference may develop gradually with training, and the only way to know how it is developing is to monitor its progress during training.

Two experiments have been conducted, however, in which extended training was provided. The first, by Vasconcelos and Urcuioli (2009), provided 60 sessions of overtraining. Although they found the expected preference for the conditioned reinforcer that followed the high peck requirement, the effect failed to reach statistical significance (likely because of a lack of statistical power). In the second, Arantes and Grace (2008) also gave extended training but failed to find a preference for the conditioned reinforcer that followed the high fixed ratio schedule. In this case, some of the pigeons had previously been used in an experiment involving relatively lean free-operant concurrent-chains schedules, and the rest had varied experimental histories; something in these past histories might have led to the different results. For example, after experience with lean schedules, transfer to the fixed ratio 20 schedule may not have been aversive enough to produce sufficient within-trial contrast for a significant conditioned reinforcer preference.

Another reason that a consistent preference for the conditioned reinforcer that follows the more aversive initial link has not always been found may be the nature of the choice given on test trials. When the pigeons are given a choice between two conditioned reinforcers, both of which have been associated with continuous reinforcement, response strength to both may be strong enough to obscure reliable differences between them. In this regard, it is noteworthy that when pigeons have been tested for their preference between the two stimuli associated with the absence of reinforcement, the stimulus that was preceded by the higher fixed ratio was preferred, and that preference was greater than the preference for the conditioned reinforcer that normally accompanied it (Clement et al., 2000). That is, when choosing between conditioned reinforcers, the pigeons may respond impulsively to the first stimulus they see, whereas when choosing between the two stimuli not associated with reinforcement, choice latency is generally longer and the choice may therefore be less impulsive.

Research on the sunk cost effect and on justification of effort involves somewhat different procedures. The sunk cost effect involves continuing a course of action because of a prior investment, whereas justification of effort involves giving added value to the stimulus that follows greater effort. However, it is possible to view the two effects as resulting from a similar underlying mechanism. In the case of justification of effort, one can view the greater effort (or prior relatively aversive event) as a greater investment, and in the case of the sunk cost effect, one can view the prior investment as giving greater value to continued effort to complete the trial. It would be of interest to pursue the relation between these two effects in future research.

Suboptimal Choice: An Analog of Human Gambling

When humans engage in commercial, unskilled gambling, they are responding suboptimally because they are choosing a low-probability, high-payoff alternative over a more optimal high-probability, low-payoff alternative (not gambling), such that the net expected return is less than what was wagered (e.g., slot machines and lotteries). Such choices can be thought of as impulsive in the sense that the gambler’s behavior suggests a failure to consider the long-term consequences of the decision. In fact, research has shown that patterns of decision making in pathological gamblers are marked by a preference for immediate gratification or relief from states of deprivation relevant to their addiction, despite negative long-term consequences (Yechiam, Busemeyer, Stout, & Bechara, 2005).

Recent research suggests that decision making depends on two different sources of input: primary processes, governed by relatively simple associative learning, that typically operate impulsively and often without awareness; and secondary processes, composed of what we normally think of as thought processes, the conscious effort to consider possibilities, and the attempt to resolve dilemmas (Dijksterhuis, 2004; Evans, 2003; Kahneman, 2013; Klaczynski, 2005). It is widely held that nonhuman animals rely mainly on primary decision processes associated with more primitive areas of the brain. Interestingly, pathological gamblers are also thought to arrive at decisions through the use of more primitive areas of the brain (Potenza, 2008). Thus, research with humans suggests that problem gambling involving games of chance (rather than skill) engages automatic processes that may also apply to other species.

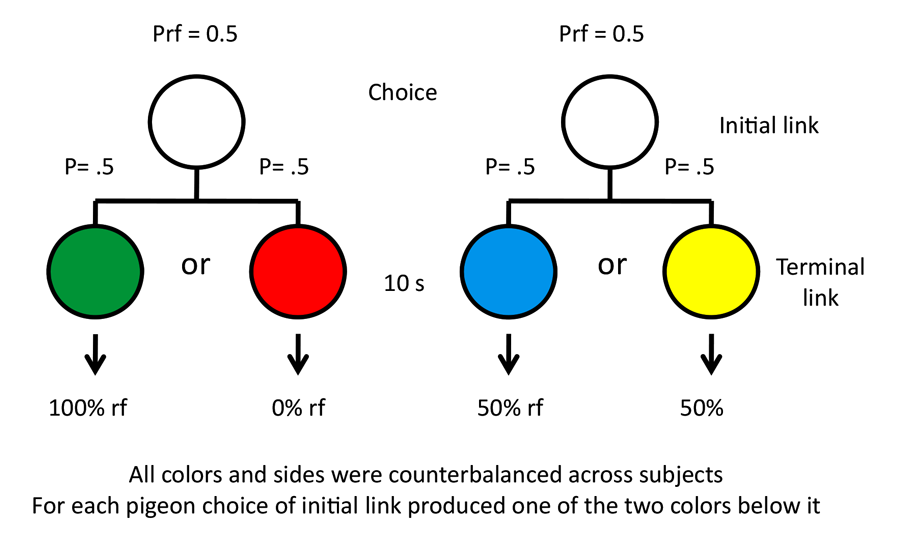

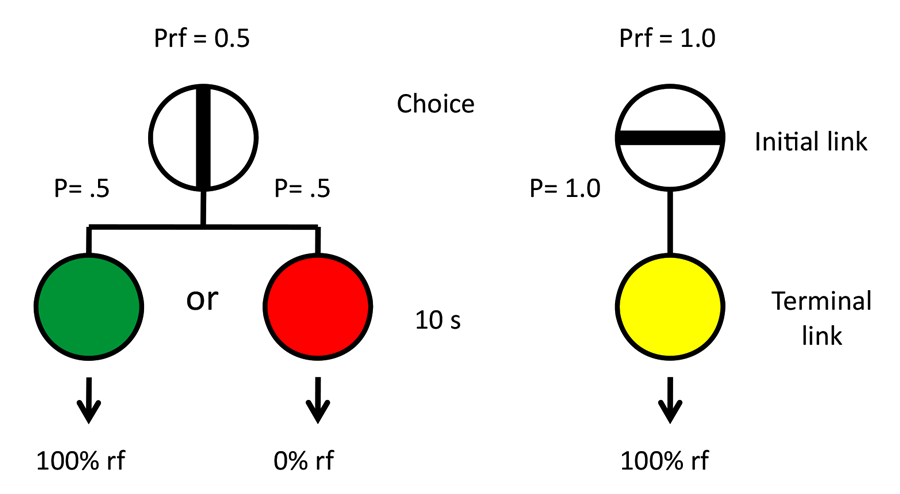

Our research into suboptimal choice began with a simpler question involving stimulus bias. We asked whether pigeons would prefer an alternative that provided them with discriminative stimuli over one that provided nondiscriminative stimuli if the probability of reinforcement was equated (Roper & Zentall, 1999). If they chose the discriminative stimulus alternative, they would receive, for example, a green stimulus 50% of the time, which was always followed by reinforcement, or a red stimulus 50% of the time, which was followed by the absence of reinforcement. If they chose the nondiscriminative stimulus alternative, they would receive, for example, a yellow or blue stimulus, each followed by reinforcement 50% of the time (the design of this experiment appears in Figure 7). Thus, the two alternatives each provided 50% reinforcement, but in a sense we were asking whether pigeons would prefer information (the red or green stimulus) over the absence of information (the yellow or blue stimulus), and the answer was that they clearly showed a strong preference for the discriminative stimuli. Similar results have been reported by Fantino (1977) with pigeons, by Prokasy (1956) with rats, and by Bromberg-Martin and Hikosaka (2009) with monkeys.

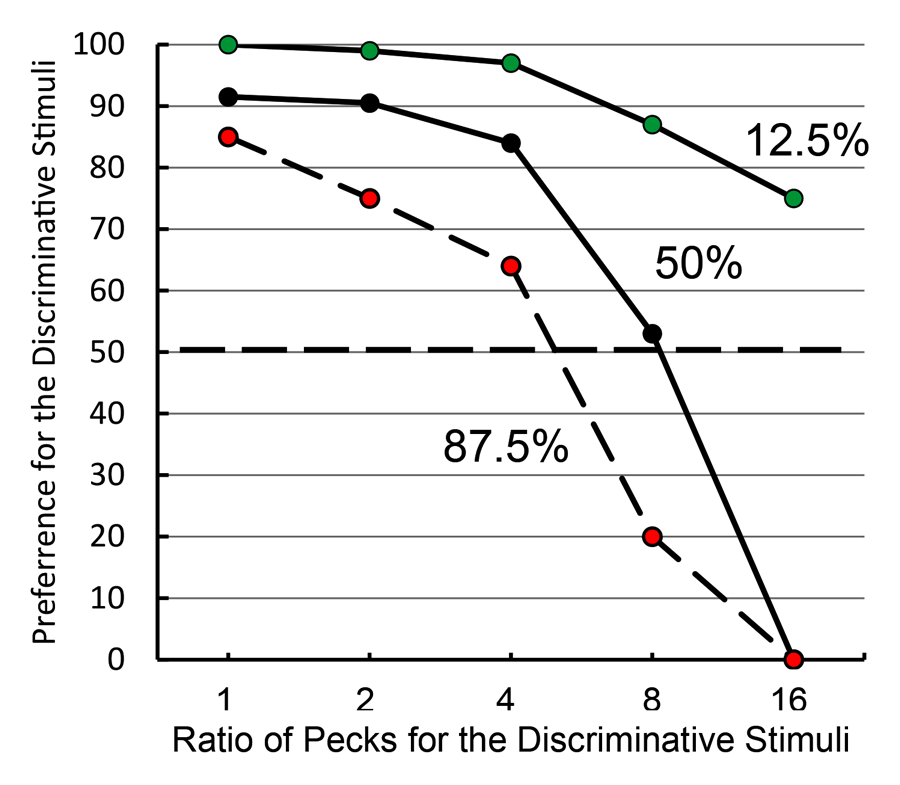

As part of our experiment (Roper & Zentall, 1999, Experiment 1), we tested an important prediction of information theory: that the degree of preference should depend on the amount of information provided by the discriminative stimuli. Because uncertainty about whether reinforcement will occur is greatest when reinforcement and nonreinforcement are equally likely, the information provided by the discriminative stimuli should be maximal when the probability of reinforcement is 50%. If at the time of choice the probability of reinforcement was either greater than or less than 50%, the discriminative stimuli would provide less information, and the preference for that alternative should be reduced. This should be true because, in both cases, the discrepancy between what was expected at the time of choice and what occurred following choice (upon the appearance of the discriminative stimuli) would decrease as the overall probability of reinforcement deviated from 50%.
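The symmetry of this prediction can be checked numerically under the standard information-theoretic assumption that a perfectly discriminative stimulus carries as much information as the uncertainty it removes, that is, the Shannon entropy of the binary reinforced/nonreinforced outcome. This is a minimal sketch; `outcome_entropy` is an illustrative name, not part of the original analysis.

```python
import math

def outcome_entropy(p: float) -> float:
    """Shannon entropy (bits) of a binary reinforced/nonreinforced outcome.

    This equals the information carried by a stimulus that perfectly
    signals which outcome will occur.
    """
    if p in (0.0, 1.0):
        return 0.0  # no uncertainty, so a signal carries no information
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

for p in (0.125, 0.5, 0.875):
    print(f"p(reinforcement) = {p:<5}  information = {outcome_entropy(p):.3f} bits")
```

Both 12.5% and 87.5% reinforcement yield about 0.544 bits, compared with 1 bit at 50%, so on this account the preference for the discriminative alternative should fall by the same amount whether reinforcement is made more or less likely.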

In keeping with information theory, when the overall probability of reinforcement was increased to 87.5% (i.e., the green stimulus that predicted reinforcement occurred most of the time) and both the yellow and blue stimuli were associated with 87.5% reinforcement, the pigeons showed less of a preference for the discriminative stimulus alternative. However, when the overall probability of reinforcement was decreased to 12.5% (i.e., the green stimulus that predicted reinforcement occurred very seldom) and both the yellow and blue stimuli were associated with 12.5% reinforcement, preference for the discriminative stimulus alternative actually increased (see Figure 8). Thus, the symmetry predicted by information theory was not found. This finding led us to ask how much the pigeons would be willing to work for the discriminative stimuli if a single peck was required to obtain the nondiscriminative stimuli (Roper & Zentall, 1999, Experiment 2). What we found was that pigeons for which the overall probability of reinforcement was 50% became indifferent between the two alternatives when the ratio of responses (discriminative stimulus vs. nondiscriminative stimulus) was 8:1. When the overall probability of reinforcement was 87.5%, the pigeons were indifferent between the two alternatives when the ratio of responses was about 4.5:1; and when the overall probability of reinforcement was 12.5%, the pigeons still preferred the discriminative stimulus alternative when the ratio of responses was 16:1.

Figure 8. Test of information theory (after Roper & Zentall, 1999). Pigeons were given a choice between making 1 peck to obtain the nondiscriminative stimuli and making an increasing number of pecks (from 1 to 16, in blocks of 2 sessions) to obtain the discriminative stimuli. The different groups represent the overall probability of reinforcement associated with both alternatives.

The results of these experiments suggested that the discrepancy between what was expected and the signal for reinforcement (“good news”) was more important than the discrepancy between what was expected and the signal for nonreinforcement (“bad news”). These results were consistent with what had been reported earlier by McDevitt, Spetch, and Dunn (1997), who found that inserting a gap between the choice response and the terminal link S– had little effect on choice, whereas inserting a similar gap between the choice response and the suboptimal terminal link S+ resulted in a strong preference for the optimal alternative. Similarly, Spetch et al. (1994) found that increasing the duration of the suboptimal terminal link S+ resulted in a strong preference for the optimal alternative, whereas increasing the duration of the suboptimal terminal link S– had little effect on choice of the suboptimal alternative.

These results led us to ask whether pigeons would be willing to forgo food to obtain discriminative stimuli. There was already some evidence in the literature that they would, but the results of those experiments were mixed (Belke & Spetch, 1994; Fantino, Dunn, & Meck, 1979; Mazur, 1996; Spetch, Belke, Barnet, Dunn, & Pierce, 1990; Spetch, Mondloch, Belke, & Dunn, 1994). In those experiments, pigeons were given a choice between an alternative that provided a stimulus associated with 100% reinforcement (the optimal choice) and an alternative that 50% of the time provided a stimulus associated with 100% reinforcement and 50% of the time provided a stimulus associated with the absence of reinforcement (the suboptimal choice). In each experiment, several of the pigeons preferred the suboptimal alternative, whereas others preferred the optimal alternative.

Originally, we thought that the difference between 50% reinforcement and 100% reinforcement might have been too great to allow a consistent preference for the suboptimal alternative, and we asked whether we could obtain a more consistent preference by decreasing the value of the optimal alternative from 100% to 75% reinforcement (Gipson, Alessandri, Miller, & Zentall, 2009; see design in Figure 9). Although there were still individual differences, as a group the pigeons showed a significant preference for the suboptimal alternative.

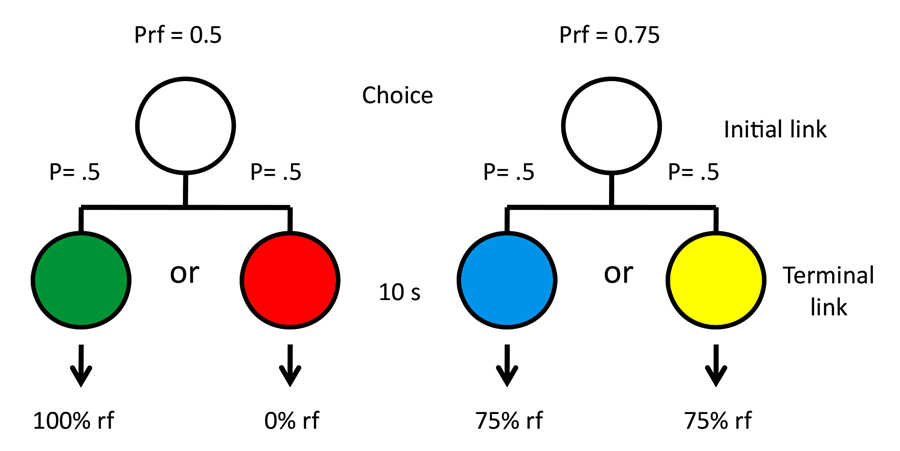

The results of the Gipson et al. (2009) study, together with the results of our earlier study in which the overall probability of reinforcement was reduced to 12.5% (Roper & Zentall, 1999), suggested that we might obtain a more pronounced suboptimal choice effect if we reduced the probability of reinforcement associated with the suboptimal alternative below 50%. Stagner and Zentall (2010) tested this hypothesis with a design in which, on 20% of the trials, choice of the suboptimal alternative led to a stimulus that predicted reinforcement and, on 80% of the trials, to a stimulus that predicted nonreinforcement, whereas choice of the optimal alternative led to a stimulus associated with reinforcement 50% of the time. The design is similar to that presented in Figure 9, except that the probability of reinforcement associated with the suboptimal alternative was reduced to 20% and the probability of reinforcement associated with the optimal alternative was reduced to 50%. Thus, choice of the optimal alternative provided 2.5 times as much reinforcement as choice of the suboptimal alternative. In spite of this difference, the pigeons showed a consistently strong (better than 90%) preference for the suboptimal alternative. Furthermore, the suboptimal preference depended entirely on the discriminative stimuli that followed its choice: when both stimuli that followed the suboptimal choice predicted 20% reinforcement, the pigeons showed a strong (almost 90%) preference for the optimal alternative.
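The arithmetic behind the 2.5:1 ratio, and behind the control condition, can be made explicit by weighting each terminal-link stimulus by how often it appears. This is an illustrative sketch: `overall_reinforcement` is a hypothetical helper, the optimal alternative is represented as a single stimulus followed by food 50% of the time, and exact fractions are used only to avoid floating-point rounding.

```python
from fractions import Fraction


def overall_reinforcement(design):
    """Overall probability of food given a choice of one alternative.

    design: list of (p_stimulus, p_food_given_stimulus) pairs, one per
    terminal-link stimulus; the p_stimulus values must sum to 1.
    """
    if sum(p_s for p_s, _ in design) != 1:
        raise ValueError("stimulus probabilities must sum to 1")
    return sum(p_s * p_food for p_s, p_food in design)


F = Fraction
# Stagner and Zentall (2010), as described in the text.
suboptimal = [(F(1, 5), F(1)), (F(4, 5), F(0))]     # 20%: S+ always food; 80%: S- never
optimal = [(F(1), F(1, 2))]                         # stimulus followed by food 50% of the time
control = [(F(1, 5), F(1, 5)), (F(4, 5), F(1, 5))]  # control: both stimuli followed by food 20%

print(overall_reinforcement(suboptimal))  # 1/5
print(overall_reinforcement(optimal))     # 1/2
print(overall_reinforcement(optimal) / overall_reinforcement(suboptimal))  # 5/2
print(overall_reinforcement(control))     # 1/5
```

The control alternative has exactly the same overall value as the discriminative suboptimal alternative, so the swing from better than 90% suboptimal choice to almost 90% optimal choice cannot be attributed to overall reinforcement; only the predictive relation between stimulus and outcome differed.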

Stagner and Zentall (2010) demonstrated that a strong, consistent preference for the suboptimal alternative could be found, but it is possible that the preference was at least partially determined by the unpredictability of reinforcement following choices of the optimal alternative. To test this hypothesis, we conducted an experiment in which we manipulated the magnitude of reinforcement rather than the probability of reinforcement (Zentall & Stagner, 2011a). In this experiment, on 20% of the trials, choice of the suboptimal alternative led to a stimulus that predicted 10 pellets of food, whereas on 80% of the trials, it led to a stimulus that predicted zero pellets. Choice of the optimal alternative always led to a stimulus that predicted three pellets of food (see design in Figure 10). Thus, choice of the optimal alternative resulted in three pellets, whereas choice of the suboptimal alternative resulted in an average of two pellets (0.2 × 10). Once again, a strong preference for the suboptimal alternative was found. Monkeys, too, show a similar effect: they prefer discriminative stimuli over nondiscriminative stimuli even when the discriminative stimuli predict less reinforcement on average (Blanchard, Hayden, & Bromberg-Martin, 2015).

An alternative account of the Zentall and Stagner (2011a) experiment is that the pigeons chose suboptimally because they preferred the variable outcome (10 pellets vs. no pellets) over the fixed outcome. To test this hypothesis, Zentall and Stagner made the two stimuli that followed choice of the suboptimal alternative equally predictive (i.e., each was followed by 10 pellets 20% of the time), so that the outcome remained variable but the stimuli were no longer discriminative, and now the pigeons consistently chose the optimal alternative. Thus, the variability of reinforcement given choice of the suboptimal alternative was not responsible for the suboptimal choice.

It appears that the predictive value of the conditioned reinforcer is what is responsible for the preference for the suboptimal alternative. In fact, it occurred to us that there might be something special about the certainty with which the suboptimal alternative’s conditioned reinforcer predicted reinforcement. The special weight that humans give to certain outcomes is the basis of the Allais paradox (Allais, 1953), which can be illustrated by the following example: If humans are given a choice between a 100% chance of being given $3 and an 80% chance of being given $4, most will prefer the suboptimal certainty of the $3 (an expected value of $3.00 vs. $3.20). However, if they are given a proportionally similar choice between a 25% chance of being given $3 and a 20% chance of being given $4 (both probabilities divided by four), most will prefer the more optimal $4 option. Although in humans this effect can be reduced with practice, it can be maintained when the discrimination is made more difficult (Shafir, Reich, Tsur, Erev, & Lotem, 2008).
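What makes this pattern paradoxical is that no expected-utility calculation can produce it: the second choice is the first with both probabilities divided by four, so whatever utility a person assigns to $3 and $4, the ordering of the two options should be the same in both choices. The sketch below checks this for a few hypothetical power-law utility functions, assuming that receiving nothing has zero utility; the helper names are illustrative.

```python
def expected_utility(p: float, amount: float, utility) -> float:
    """Expected utility of a gamble that pays `amount` with probability p and
    nothing otherwise, assuming utility(0) == 0."""
    return p * utility(amount)


# Hypothetical utility functions: linear (i.e., expected value) and
# increasingly concave (risk-averse) power functions.
utilities = {a: (lambda x, a=a: x ** a) for a in (1.0, 0.8, 0.5, 0.2)}


def choice(p_safe: float, p_risky: float, u) -> str:
    """Option preferred under utility u: $3 with p_safe vs. $4 with p_risky."""
    return "$3" if expected_utility(p_safe, 3, u) > expected_utility(p_risky, 4, u) else "$4"


for a, u in utilities.items():
    first = choice(1.00, 0.80, u)   # certain $3 vs. 80% chance of $4
    second = choice(0.25, 0.20, u)  # same choice with both probabilities divided by 4
    print(f"u(x) = x**{a}: first choice {first}, second choice {second}")
```

Each utility function picks the same option in both choices; only the strength of its risk aversion decides which option that is. The common human pattern ($3 first, $4 second) therefore cannot be reproduced by any of them.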

To determine whether the Allais paradox might apply to pigeons, we asked whether pigeons would cease to prefer the suboptimal alternative if the conditioned reinforcer that followed that choice was followed by reinforcement only 80% of the time that it occurred (Zentall & Stagner, 2011b). Although the preference for the suboptimal alternative was somewhat reduced, the pigeons still showed better than an 80% preference for the suboptimal alternative. Thus, the suboptimal preference does not depend on the certainty of the predictive value of the conditioned reinforcer.

Choice of the suboptimal alternative does not appear to be rational. On the one hand, the lower the probability that the conditioned reinforcer occurs, the stronger the preference for that alternative (Roper & Zentall, 1999; Zentall & Stagner, 2011a). Although this preference would seem to be increasingly suboptimal, it is consistent with a prediction of the delay reduction hypothesis (Fantino & Abarca, 1985), because the less frequently the conditioned reinforcer occurs, the better it predicts reinforcement when it does occur, compared to when it is absent. On the other hand, the less frequent the conditioned reinforcer, the more frequent the stimulus associated with the absence of reinforcement (the presumed conditioned inhibitor), and the more frequent that stimulus is, the weaker the preference for the suboptimal alternative should be. Apparently, the stimulus that predicts the absence of reinforcement does not function as a conditioned inhibitor (see Spetch et al., 1994).

A more direct test of the conditioned inhibitory value of the stimulus that predicts the absence of reinforcement was provided by Laude, Stagner, and Zentall (2014). They used the design of Zentall and Stagner (2011a; see Figure 10) but substituted a black vertical line on a white background for the red stimulus that was associated with the absence of reinforcement. To test for inhibition, on probe trials they used a combined cue test (superimposing a presumed conditioned inhibitor on a known conditioned reinforcer, see Hearst, Besley, & Farthing, 1970) and tested the pigeons early in training, before a preference for the suboptimal alternative was observed, and later in training, after the pigeons were showing a preference for the suboptimal alternative. Early in training, Laude et al. found strong evidence for conditioned inhibition; however, later in training the evidence for inhibition was substantially reduced. These results support the hypothesis that the negative value of the stimulus that predicts the absence of reinforcement loses its effect with training.

The results of this line of research lead to a surprising conclusion. It is the value of the conditioned reinforcer, rather than how often it occurs, that determines preference for each of the alternatives, and the negative value of the stimulus that predicts the absence of reinforcement comes to play little role in that preference. Thus, the pigeons choose the alternative whose conditioned reinforcer has the greater value, independent of its frequency. To see how this accounts for the results of the suboptimal choice experiments, we need to reconsider their designs. In the Gipson et al. (2009) experiment, the pigeons chose suboptimally because they preferred the signal for 100% reinforcement that followed the suboptimal choice over the signal for 75% reinforcement that followed the optimal choice. In the Stagner and Zentall (2010) experiment, the pigeons showed a stronger suboptimal preference because they preferred the signal for 100% reinforcement that followed the suboptimal choice over the signal for 50% reinforcement that followed the optimal choice. In the Zentall and Stagner (2011a) experiment, the pigeons chose suboptimally because they preferred the signal for 10 pellets that followed the suboptimal choice over the signal for three pellets that followed the optimal choice. We call this the conditioned reinforcer value hypothesis.
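The difference between this hypothesis and simple maximization can be sketched as two decision rules applied to the designs described above. This is a deliberate simplification under stated assumptions: the `Alternative` class and `predict` helper are hypothetical, each alternative is reduced to its terminal-link stimuli, stimuli with identical values are merged, and the conditioned-reinforcer rule compares only the best signal on each side, ignoring its frequency and any stimulus that signals no food. The last entry is the 50% vs. 100% procedure of Spetch et al. (1990) and others, for which the hypothesis predicts indifference.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Alternative:
    """One choice alternative: its terminal-link stimuli as
    (probability the stimulus appears, expected food given that stimulus)."""
    stimuli: list[tuple[float, float]]

    def expected_food(self) -> float:
        # What a maximizing chooser would compare.
        return sum(p * food for p, food in self.stimuli)

    def best_signal_value(self) -> float:
        # Conditioned reinforcer value rule (as sketched here): only the best
        # signal counts; its frequency and any no-food stimulus are ignored.
        return max(food for _, food in self.stimuli)


def predict(suboptimal: Alternative, optimal: Alternative, rule) -> str:
    s, o = rule(suboptimal), rule(optimal)
    if s > o:
        return "suboptimal"
    if o > s:
        return "optimal"
    return "indifferent"


# "Food" is probability of reinforcement, except in the Zentall & Stagner
# (2011a) designs, where it is number of pellets.
designs = {
    "Gipson et al. (2009)": (Alternative([(0.5, 1.0), (0.5, 0.0)]), Alternative([(1.0, 0.75)])),
    "Stagner & Zentall (2010)": (Alternative([(0.2, 1.0), (0.8, 0.0)]), Alternative([(1.0, 0.5)])),
    "Zentall & Stagner (2011a)": (Alternative([(0.2, 10.0), (0.8, 0.0)]), Alternative([(1.0, 3.0)])),
    # Control: both suboptimal stimuli followed by 10 pellets 20% of the time.
    "Zentall & Stagner (2011a) control": (Alternative([(1.0, 0.2 * 10.0)]), Alternative([(1.0, 3.0)])),
    "Spetch et al. (1990) and others": (Alternative([(0.5, 1.0), (0.5, 0.0)]), Alternative([(1.0, 1.0)])),
}

for name, (sub, opt) in designs.items():
    print(f"{name}: expected food -> {predict(sub, opt, Alternative.expected_food)}, "
          f"CR value -> {predict(sub, opt, Alternative.best_signal_value)}")
```

Expected food favors the optimal alternative in every design, whereas the conditioned-reinforcer rule reproduces the suboptimal preferences, the return to optimal choice when the signals were made nondiscriminative, and indifference when both alternatives end in a signal for certain food.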

The finding that choice appears to be governed by the value of the conditioned reinforcers rather than their frequency, and that stimuli associated with the absence of reinforcement play little role in that choice, encouraged us to reconsider the results of earlier research (Belke & Spetch, 1994; Fantino, Dunn, & Meck, 1979; Mazur, 1996; Spetch et al., 1990; Spetch et al., 1994) in which large individual differences were found in pigeons’ choices between the 100% reinforcement and 50% reinforcement alternatives. In all of those experiments, both alternatives had conditioned reinforcers that predicted reinforcement 100% of the time. According to the conditioned reinforcer value hypothesis, the pigeons should have been indifferent between the two alternatives, but in general they were not. Most of the pigeons showed a strong preference for one of the two alternatives. It should be noted, however, that in all of those experiments, the two alternatives were defined by their spatial location, so any natural spatial preference would be confounded with a preference for one alternative or the other. One possibility is that the pigeons were in fact indifferent between the two alternatives but reverted to a spatial preference that appeared as a preference for one alternative or the other. Because such spatial preferences tend to be idiosyncratic, they would produce strong preferences in individual pigeons, but in different directions. Such a possibility was actually suggested by Spetch et al. (1994) and by Mazur (1996).
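The confound can be illustrated with a small simulation of a hypothetical pigeon that has no preference between two alternatives, A and B, but pecks the left key on 90% of trials. The bias value, trial count, and function name are assumptions for illustration only. When A always occupies the left key, the side bias masquerades as a strong preference for A; when A's side is assigned at random on each trial, the same bias averages out to apparent indifference.

```python
import random


def proportion_choosing_a(n_trials: int, p_left: float, randomize_sides: bool, seed: int = 0) -> float:
    """Simulate a hypothetical pigeon that is indifferent between alternatives
    A and B and simply pecks the left key with probability p_left.

    randomize_sides=False: A always appears on the left (location-defined).
    randomize_sides=True: A appears left or right at random on each trial.
    Returns the proportion of trials on which A was chosen.
    """
    rng = random.Random(seed)
    chose_a = 0
    for _ in range(n_trials):
        if randomize_sides:
            a_on_left = rng.random() < 0.5
        else:
            a_on_left = True
        pecked_left = rng.random() < p_left
        if pecked_left == a_on_left:
            chose_a += 1
    return chose_a / n_trials


for randomize in (False, True):
    share = proportion_choosing_a(100_000, p_left=0.9, randomize_sides=randomize)
    label = "random-side" if randomize else "fixed-side"
    print(f"{label} alternatives: A chosen on {share:.1%} of trials")
```

A pigeon with the opposite bias (`p_left=0.1`) would appear to prefer B just as strongly, which is how idiosyncratic side biases could yield strong but inconsistent preferences across birds.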

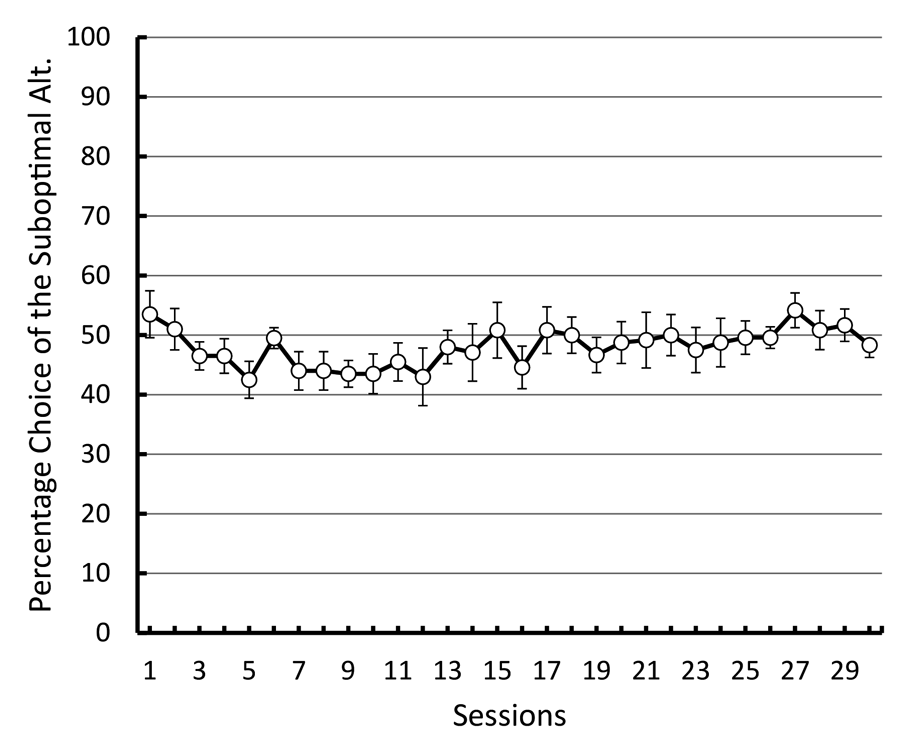

To test this hypothesis, we repeated the basic design of those experiments, giving pigeons a choice between two alternatives (Smith & Zentall, in press). Choice of one alternative resulted in discriminative stimuli: on half of those trials, a stimulus that predicted reinforcement 100% of the time, and on the remaining trials, a stimulus that predicted the absence of reinforcement (50% reinforcement overall). Choice of the other alternative resulted in a stimulus that predicted reinforcement 100% of the time. But instead of defining the alternatives by their spatial location, we defined them by shapes whose location varied from left to right across trials (see Figure 11). If the pigeons were indifferent between the two alternatives and defaulted to a spatial preference, the result would appear as indifference because the shapes appeared randomly on the left and right. In fact, the pigeons were indifferent between the two alternatives. To verify that the pigeons could discriminate between the shapes, we equated the reinforcement associated with the two stimuli that followed choice of the suboptimal alternative (both were followed by reinforcement 50% of the time) while maintaining the overall difference in reinforcement between the suboptimal (50% reinforcement) and optimal (100% reinforcement) alternatives. Now the pigeons began to choose optimally. The results of this experiment were consistent with the hypothesis that the pigeons judge the value of the conditioned reinforcer that follows choice of each alternative and choose relatively independently of the frequency of that conditioned reinforcer (see Figure 12). Furthermore, it appears that the presumed conditioned inhibitor does not function to reduce the preference for that alternative.

Figure 12. Preference for the discriminative 50% reinforcement alternative over the 100% reinforcement alternative (after Smith & Zentall, in press).

It should be noted that the conditioned reinforcer value hypothesis may not account for all of the findings that have been reported. According to this hypothesis, with the procedure used by Spetch et al. (1990) and others, optimal and suboptimal preferences should be equally frequent, but several experiments have reported that more pigeons preferred the suboptimal alternative (Belke & Spetch, 1994; Dunn & Spetch, 1990). Furthermore, when the spatial locations of the optimal and suboptimal alternatives were reversed, several of the pigeons reversed their spatial preference, following their preferred alternative to its new location. Had their original preference reflected indifference between the two alternatives combined with a side bias, these pigeons would have continued to choose the same side. Finally, as already noted, when the appearance of the conditioned reinforcer associated with the suboptimal alternative was delayed by 5 s, it resulted in a strong preference for the optimal alternative, whereas when the conditioned reinforcer associated with the optimal alternative was delayed by 5 s, it had much less effect on initial link choice. Thus, when pigeons perform this task with equal-valued conditioned reinforcers, they may not always be completely indifferent between the two alternatives. When the suboptimal link is chosen, the change in value between the initial link and the terminal link may result in delay reduction (Spetch et al., 1994) or contrast (Stagner & Zentall, 2010), which would not be present when the optimal link is chosen.

The finding that the value of the conditioned reinforcers in the terminal links can account in large part for the initial link preferences should in no way detract from the suboptimality of the initial link choice. Even when the pigeons are indifferent between the 50% and 100% reinforcement initial link alternatives (Smith & Zentall, in press; Stagner, Laude, & Zentall, 2012), they are choosing the suboptimal alternative 50% of the time, whereas they should always be choosing the optimal alternative.
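The cost of indifference can be made concrete with a short calculation. Assuming that initial link choices are independent and letting p denote the proportion of choices of the discriminative 50% alternative (a symbol introduced here for illustration), the expected probability of reinforcement per trial is:

```latex
\begin{aligned}
P(\text{reinforcement}) &= p\,(0.50) + (1 - p)\,(1.00) = 1 - 0.5\,p,\\
p = 0.5 \;\Rightarrow\; P(\text{reinforcement}) &= 1 - 0.25 = 0.75 .
\end{aligned}
```

Indifferent pigeons therefore obtain reinforcement on about 75% of trials rather than the 100% available from exclusive choice of the optimal alternative, so one trial in four goes unreinforced that need not.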

A Good Analog of Human Gambling Behavior?

To what extent are the results of these analog gambling experiments with pigeons consistent with what we know about human gambling behavior? Consistent with the conclusion that, in the pigeon procedure, stimuli associated with the absence of reinforcement produce minimal inhibition, it has been suggested that humans who gamble overstate their wins and underestimate their losses (Blanco, Ibañez, Sáiz-Ruiz, Blanco-Jerez, & Nunes, 2000). Ladouceur, Mayrand, and Tourigny (1987) further suggest that prolonged exposure to gambling may be associated with cognitive distortions and irrational beliefs about the outcomes of one’s bets.

This may also explain why, for many gamblers, losing has little effect on the future probability of gambling (until they run out of money). For example, the number of lottery tickets sold generally increases greatly when the value of the winning ticket increases, whereas it is not clear that variation in the probability of winning plays an important role in the number of tickets sold. Although one could argue that gambling odds are difficult for most of us to fully understand, problem gamblers, who should have direct experience with the relation between odds and losing, do not appear to be greatly affected by that experience. There is also evidence that problem gamblers show reduced sensitivity to aversive conditioning (Brunborg et al., 2010), which matters because aversive conditioning should serve to inhibit the behavior that produced it.

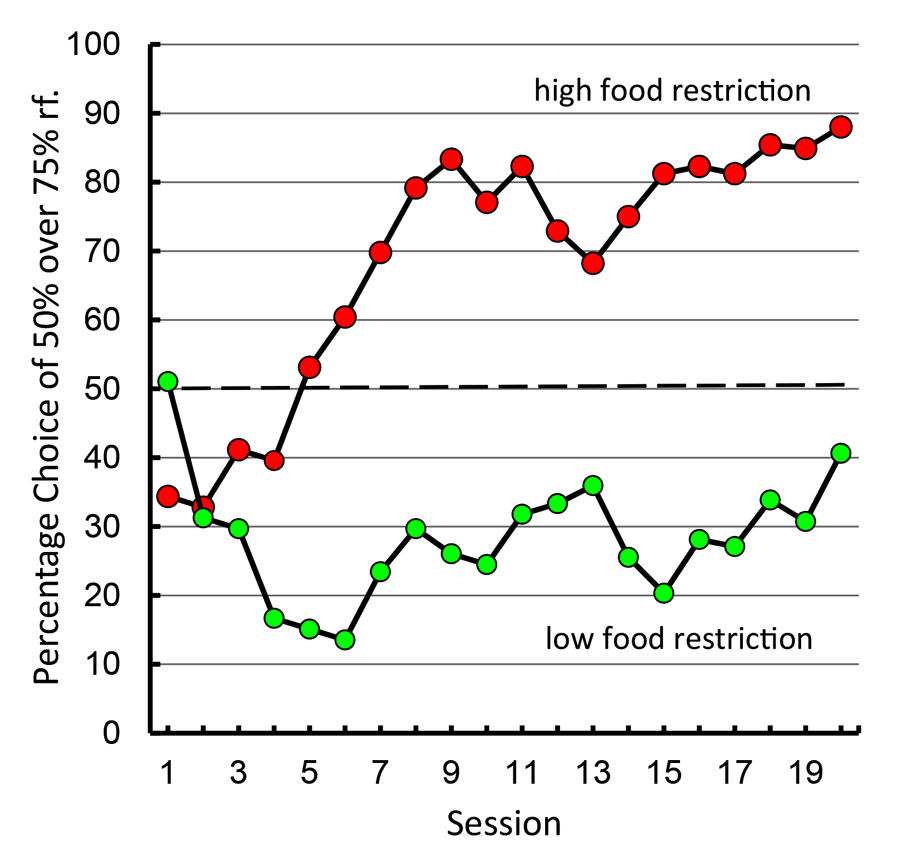

The Effect of Deprivation on Suboptimal Choice

A paradoxical demographic feature of human gambling behavior is that people with greater financial need (those of lower socioeconomic status) tend to gamble proportionally more than those of higher socioeconomic status (Lyk-Jensen, 2010; Worthington, 2001). If our pigeon model of suboptimal choice is a good analog of human gambling behavior, the level of pigeons’ food motivation should predict their degree of suboptimal choice. Laude, Pattison, and Zentall (2012) tested this hypothesis and found support for it: pigeons that were minimally food restricted (just motivated enough to participate in the experiment) had a strong preference for the optimal alternative, whereas those that were normally food restricted showed the typical suboptimal choice (see Figure 13).

Figure 13. Preference for the 50% over the 75% reinforcement alternative under high versus low levels of food restriction (after Laude, Pattison, & Zentall, 2012).

This finding would appear to be inconsistent with optimal foraging theory (Stephens & Krebs, 1986), according to which animals should have evolved to maximize their net energy intake while expending the least energy in doing so. To account for these findings, one might appeal to models of risk-sensitive foraging (Stephens, 1981). Specifically, if an animal is on a negative energy budget, such that the smaller but more frequent option (e.g., a reliable three-pellet option) does not provide a high enough rate of energy gain for it to survive, its only option may be to be risk prone and gamble on the variable option (10 pellets, 20% of the time), even though that option yields only 2 pellets per choice on average. For example, Caraco (1981) found that juncos on a negative energy budget were risk prone (preferred variable rewards), whereas those on a positive energy budget preferred the constant reward. But as Kacelnik and Bateson (1996) suggested, for pigeons trained under the present high-restriction conditions, in which the majority of their daily ration is generally received in the experiment, the rate of food intake is likely to be sufficient to produce a positive energy budget. Furthermore, a negative energy budget is a concern primarily for small birds with high metabolic rates, which would likely die overnight unless they were risk prone, rather than for larger birds such as pigeons, which can go several days without food.
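The energy-budget argument can be illustrated numerically with the two options described above. The sketch assumes, hypothetically, that a bird gets a fixed number of independent choices before nightfall and survives only if its total intake reaches a fixed requirement; the number of choices and the requirements are illustrative, and this is a deliberate simplification rather than the Stephens (1981) model. It computes the probability of reaching the requirement with the constant option (3 pellets per choice) and with the variable option (10 pellets 20% of the time, otherwise nothing).

```python
from math import comb


def p_survive_constant(n_choices: int, requirement: int, pellets: int = 3) -> float:
    """Constant option: intake is exactly n_choices * pellets, so survival is all or nothing."""
    return 1.0 if n_choices * pellets >= requirement else 0.0


def p_survive_variable(n_choices: int, requirement: int,
                       big: int = 10, p_win: float = 0.2) -> float:
    """Variable option: each choice pays `big` pellets with probability p_win, else 0.

    Survival requires at least k_min wins, where k_min * big >= requirement;
    the number of wins is binomially distributed.
    """
    k_min = max(0, -(-requirement // big))  # ceiling of requirement / big
    return sum(
        comb(n_choices, k) * p_win**k * (1 - p_win) ** (n_choices - k)
        for k in range(k_min, n_choices + 1)
    )


if __name__ == "__main__":
    n = 10  # hypothetical number of choices before nightfall
    for requirement in (20, 40):  # hypothetical positive vs negative energy budget
        print(requirement,
              p_survive_constant(n, requirement),
              round(p_survive_variable(n, requirement), 3))
```

With a requirement of 20 pellets, which the constant option can meet, the constant option guarantees survival whereas the variable option succeeds only about 62% of the time. With a requirement of 40 pellets, the constant option can never succeed and the variable option succeeds about 12% of the time, so risk proneness becomes the better strategy despite its lower mean payoff.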

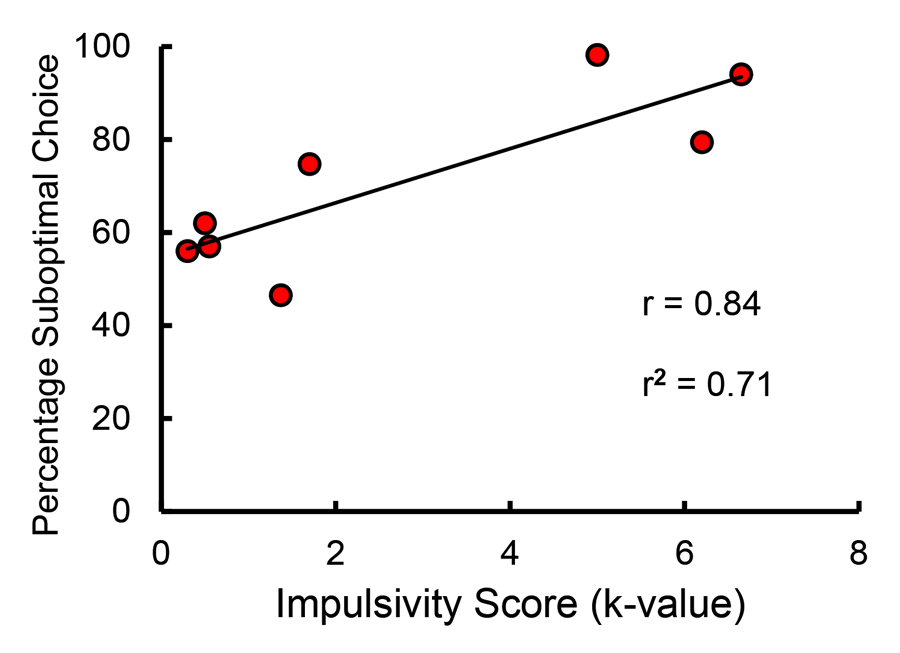

The mechanism responsible for the difference in suboptimal choice as a function of food restriction is likely to be impulsivity. Impulsivity has been proposed to be associated with the suboptimal choice involved in human gambling (Michalczuk, Bowden-Jones, Verdejo-Garcia, & Clark, 2011; Nower & Blaszczynski, 2006). Impulsivity has been defined as the inability to tolerate a delay of reinforcement, and it has been assessed with delay discounting tasks in which an organism is given a choice between a small immediate reinforcer and a larger delayed reinforcer. The delay at which the organism is indifferent between the two alternatives defines the slope of the discounting function and thus the degree of impulsivity. Impulsive individuals require that the delay to the larger reinforcer be relatively short before they will prefer it, so for them the slope of the discounting function is relatively steep. We have recently found that the slope of the delay discounting function for pigeons is a good predictor of the degree to which they prefer the suboptimal alternative in the suboptimal choice task (Laude, Beckmann, Daniels, & Zentall, 2014; see Figure 14).

Figure 14. Correlation between impulsivity (as measured by delay discounting) and suboptimal choice (after Laude, Beckmann, Daniels, & Zentall, 2014).
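The relation between the indifference point and the steepness of discounting can be sketched numerically. The sketch assumes a hyperbolic discounting function, V = A / (1 + kD), a form widely used in delay discounting research, in which the parameter k stands in for the slope described above; the reinforcer amounts, the k values, and the use of seconds as the delay unit are hypothetical. Setting the discounted value of the larger delayed reinforcer equal to the value of the small immediate one gives the indifference delay D = (A_large / A_small - 1) / k.

```python
def discounted_value(amount: float, delay: float, k: float) -> float:
    """Assumed hyperbolic discounting: present value of `amount` delivered after `delay`."""
    return amount / (1.0 + k * delay)


def indifference_delay(small: float, large: float, k: float) -> float:
    """Delay to the large reinforcer at which it is worth exactly as much as an
    immediate small reinforcer: small = large / (1 + k * D), so D = (large / small - 1) / k.
    """
    if not (large > small > 0 and k > 0):
        raise ValueError("requires large > small > 0 and k > 0")
    return (large / small - 1.0) / k


if __name__ == "__main__":
    small, large = 2.0, 6.0  # hypothetical reinforcer amounts (pellets)
    for k in (0.1, 0.5):     # shallow (less impulsive) vs steep (more impulsive) discounting
        d = indifference_delay(small, large, k)
        print(f"k={k}: indifferent when the large reinforcer is delayed {d:.1f} s")
```

With these illustrative values, a subject with k = 0.5 stops preferring the larger reinforcer once it is delayed by more than about 4 s, whereas one with k = 0.1 tolerates delays of up to about 20 s; the steeper discounter is the more impulsive one.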

The Effect of Environmental Enrichment on Suboptimal Choice

There is some suggestion from research with rats that extra-experimental environmental factors such as social and physical enrichment can affect a rat’s propensity to self-administer drugs of addiction (Stairs & Bardo, 2009). Rats that are housed in an enriched group environment (a large cage with other rats and objects that are changed regularly) show a significantly lower tendency to self-administer drugs than rats that are housed normally (individually). The mechanism by which environmental enrichment reduces drug self-administration appears to involve a reduction in impulsive behavior (Perry & Carroll, 2008) as well as a reduction in the effectiveness of conditioned reinforcers (Jones, Marsden, & Robbins, 1990). Impulsivity has also been implicated in human gambling behavior (Steel & Blaszczynski, 1998), and, as already noted, conditioned reinforcement has been proposed to account for suboptimal choice by animals (Dinsmoor, 1983). Furthermore, there is evidence that similar physiological mechanisms underlie compulsive gambling and drug addiction (Potenza, 2008).

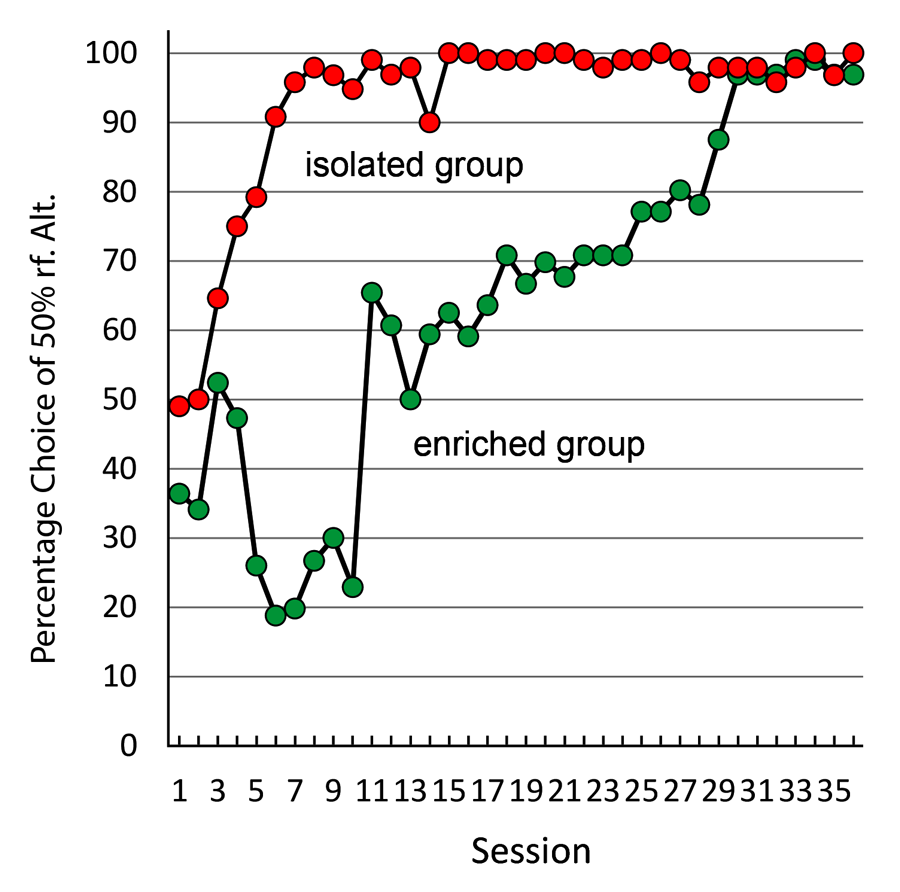

To determine the effect of housing on suboptimal choice, we gave one group of pigeons experience in an enriched environment (4 hr a day in a large cage with four other pigeons), while the control pigeons remained in their normal one-to-a-cage housing (Pattison, Laude, & Zentall, 2013). When we exposed the pigeons from both groups to the suboptimal choice task, the normally housed pigeons showed the typical suboptimal choice, whereas the enriched pigeons chose optimally for several sessions and were then much slower to begin choosing suboptimally (see Figure 15). Thus, enriched housing appears to affect suboptimal choice, although the effect may be only temporary. These findings may have implications for the treatment of problem gambling in humans: if the results generalize, exposing human gamblers to a socially and physically enriched environment may reduce their attraction to gambling.

Figure 15. Preference for the 50% over the 75% reinforcement alternative by pigeons given 4 hr a day of group-cage experience versus normally housed pigeons (after Pattison, Laude, & Zentall, 2013).

Analysis of Gambling and Suboptimal Choice

To explain why pigeons prefer discriminative stimuli over nondiscriminative stimuli, Dinsmoor (1983) proposed that animals are attracted to conditioned reinforcers. However, the stimuli associated with 0% reinforcement should produce conditioned inhibition, and when the discriminative alternative provides 20% reinforcement, the nonreinforced trials occur four times as often as the reinforced trials. We now know that although Dinsmoor ignored conditioned inhibition, he was probably correct to do so, because the presumed conditioned inhibitors are relatively ineffective, even when they occur four times as often as the conditioned reinforcer. Furthermore, we now know that the frequency of the conditioned reinforcer is relatively unimportant as well (Stagner et al., 2012); that is, the probability of winning is relatively unimportant. This finding, too, has implications for human gambling behavior. If the probability that the conditioned reinforcer will appear is relatively unimportant, that provides a plausible explanation of why humans gamble when the odds of losing are very high (as in lotteries). Furthermore, those who run casinos and lotteries have found a way to get people to gamble, even though they may very rarely win, by drawing attention to other people’s wins (bells ringing and lights flashing when there is a slot-machine winner in a casino, and an announcement on TV when there is a lottery jackpot winner). By doing this, they make winning appear much more likely than it is (the availability heuristic; Tversky & Kahneman, 1973).

Why losses carry relatively little weight for humans and other animals is not clear, but in nature this tendency may have had adaptive value. If food is scarce, there may be many more failures than successes in finding food, but developing an inhibition of searching would generally not be adaptive. Thus, in nature, it may be more adaptive to disregard, or at least de-emphasize, losses.

A second reason that animals may be attracted to low-probability but high-valued outcomes is that their attraction, which generally takes the form of approach behavior, is likely to affect later outcomes. As an animal approaches the edge of a foraging patch, it may occasionally encounter a high-valued outcome, and entering the patch may increase the probability of such outcomes. Thus, in nature, attraction to a low-probability but high-valued outcome may increase that probability. That would not be true, of course, in commercial gambling, where the probability of reinforcement is independent of prior choices.

Treatment Implications

The present research suggests that one approach to the treatment of problem gambling may be to make wins less salient and, perhaps more important and easier to accomplish, to make losses more salient. The present research also suggests that changes in environmental conditions may affect gambling. It may be difficult to overcome the greater tendency of people of lower socioeconomic status to gamble because, although the real cost of gambling is relatively higher for them than for people of higher socioeconomic status, winning a jackpot would presumably represent a greater improvement in lifestyle for those who are poor. Alternatively, it may be possible to affect the tendency to gamble by making other changes in the environment. It is not clear whether problem gamblers spend as much time gambling as they do because they have few outside interests or whether problem gambling leads to having few outside interests. However, the finding with pigeons that environmental enrichment can reduce the attraction to the suboptimal alternative suggests that exposing humans to other enriching activities may also reduce their attraction to gambling.

Conclusion

The fact that several examples of bias and suboptimal choice by humans can also be found in pigeons suggests that the mechanisms responsible for those choices by humans do not depend on culture (in the case of sunk cost, “don’t waste”), complex cognition (in the case of justification of effort, the need for consistency between beliefs and actions), or even entertainment value (in the case of gambling, “it’s fun”). Instead, these behaviors appear to be associated with basic behavioral processes that are likely to have evolved because they had adaptive value in natural environments.

References

Alessandri, J., Darcheville, J.-C., Delevoye-Turrell, Y., & Zentall, T. R. (2008). Preference for rewards that follow greater effort and greater delay. Learning & Behavior, 36, 352–358. doi:10.3758/LB.36.4.352

Alessandri, J., Darcheville, J.-C., & Zentall, T. R. (2008). Cognitive dissonance in children: Justification of effort or contrast? Psychonomic Bulletin & Review, 15, 673–677. doi:10.3758/PBR.15.3.673

Allais, M. (1953). Le comportement de l’homme rationnel devant le risque: Critique des postulats et axiomes de l’école Américaine. Econometrica, 21, 503–546.

Arantes, J., & Grace, R. C. (2008). Failure to obtain value enhancement by within-trial contrast in simultaneous and successive discriminations. Learning & Behavior, 36, 1–11. doi:10.3758/LB.36.1.1

Ariely, D. (2010). Predictably irrational: The hidden forces that shape our decisions. New York: Harper Perennial.

Arkes, H. R., & Ayton, P. (1999). The sunk cost and Concorde effects: Are humans less rational than lower animals? Psychological Bulletin, 125, 591–600. doi:10.1037/0033-2909.125.5.591