Clarifying Contrast, Acknowledging the Past, and Expanding the Focus

Abstract

This commentary highlights, clarifies, and questions various historical and theoretical points in Thomas Zentall’s review “What Suboptimal Choice Tells Us About the Control of Behavior.” Particular attention is paid to what Zentall refers to as the “unskilled gambling” paradigm. We acknowledge additional contributions to the study of suboptimal choice and clarify some theoretical issues foundational to a behavioral approach. We also raise important questions about Zentall’s use of the concept of “contrast,” how it is related to previous contrast research, and how it fails to extend a very similar explanation that predates it.

Keywords: suboptimal choice, conditioned reinforcement, contrast

Zentall’s (2019) review sets out to describe “what suboptimal choice tells us about the control of behavior.” It is useful to have different types of suboptimal behavior discussed in one article, and although we are grateful for the focus on suboptimality, our commentary focuses on a few difficulties with his assessment. At the outset, the historical framework for suboptimal choice appears to have been recast for the purposes of this review. For example, Zentall writes,

Those of us who study the behavior of animals assume that they have evolved to maximize their success (e.g., at finding food) and much of learning theory (Skinner, 1938; Thorndike, 1911) is based on this premise. Animals select those responses that lead to the increased probability of reinforcement over those that do not. When animals’ behavior is consistent with this theory, it strengthens our belief in the validity of the theory. (p. 1)

The assumption that animals “have evolved to maximize their success (e.g., at finding food)” (p. 1) is independent of behaviorist views on learning and not, as Zentall asserts, foundational to it. Neither of the two classic works he cites (Skinner, 1938; Thorndike, 1911, both digitally archived in the public domain) defines behavior in terms of any kind of maximization. Moreover, the subsequent claim that “animals select those responses that lead to the increased probability of reinforcement” (p. 1) is a further distortion of the behaviorist position. On both a phylogenetic and ontogenetic level, it is the environment, not the organism, that is selecting behavior (Baum, 2008; Skinner, 1981). To suppose otherwise would require the invocation of para-mechanistic hypotheses and theories (e.g., intentionality). In this context, Zentall’s use of the term reinforcement seems more akin to a layperson’s conception of reward. For Skinner (1953, p. 72), reinforcement is a functional description of an environment–behavior relation, not a theory to be falsified. If a pigeon learns to select an option that leads to food 20% of the time instead of an option that leads to food 50% of the time, then that 20% option is, by definition, the more reinforcing of the two. The question for researchers is, why is it the more reinforcing of the two (i.e., what are the controlling variables)? We emphasize these distinctions not only for reasons of historical accuracy but because a functional analysis underlies the explanation of suboptimal choice discussed here.

Although Zentall’s review does provide a context for the summary of recent work in his laboratory, a reader may wish to look elsewhere for an introduction to a more general understanding of the phenomena described in this review and in the earlier article (Zentall, 2016). One example most relevant to our work is the treatment of what is referred to as “unskilled gambling” in both articles. A reader could be forgiven for believing that the study of this form of suboptimal choice began recently, but it should be made clear that much of Zentall’s own work described in the present review replicates and, at times, extends much earlier work. The initial investigation of suboptimal choice (Kendall, 1974) built on the study of observing behavior (e.g., Wilton & Clements, 1971). Kendall’s work survived considerable skepticism from contemporaries (see, e.g., Fantino, Dunn, & Meck, 1979). The phenomenon has since been studied extensively by others (see McDevitt, Dunn, Spetch, & Ludvig, 2016, and Vasconcelos, Machado, & Pandeirada, 2018, for reviews from learning and optimal foraging perspectives, respectively).

The review describes three phenomena covered in an earlier review and three additional phenomena. Zentall concludes his review by stating that “in all six of the examples of suboptimal or biased choice by pigeons presented in the present article together with those presented in Zentall (2016), the suboptimal behavior can be explained in terms of evolved heuristics” (p. X). However, the phenomena are described at various times in terms of more than just heuristics, as both optimal foraging and learning principles are appealed to regularly throughout the article. Even within a given theoretical frame, the discussion is confusing at times. Zentall offers a hypothesis for suboptimal choice that appears to redefine the term contrast and, as discussed next, bears little resemblance to how the term is used in the extensive literature on behavioral contrast. In addition, the concept has received only selective use by Zentall and his colleagues in the past to account for some suboptimal results but not others (e.g., Smith, Bailey, Chow, Beckmann, & Zentall, 2016; Smith & Zentall, 2016; Stagner, Laude, & Zentall, 2012). Thus, it is curious that this explanation is presented in the present review without qualification. The understanding of any phenomenon is enhanced when alternative explanations are presented and their relative strengths and weaknesses are explored. The integrity of psychological research faces significant challenges (Chambers, 2017) and is better served when the goal is not to argue for a particular point of view but to present a balanced assessment of the evidence as a whole (Johnson, 2013).

Contrast Revisited

Zentall (2019) introduces contrast as a “third factor” in suboptimal choice:

Although the predictive value of the conditioned reinforcer that follows choice of each alternative, independent of its probability of occurrence, appears to predict choice (Smith & Zentall, 2016), there is evidence suggesting that there may be a third factor (Case & Zentall, in press; McDevitt et al., 2016) … positive contrast between the expected value of reinforcement following choice of the suboptimal alternative and the value of the conditioned reinforcer that follows on half of the trials. (p. 2)

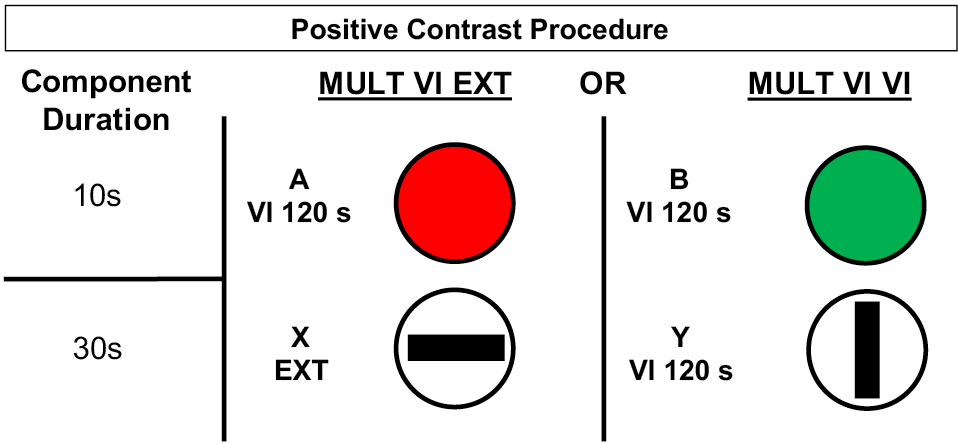

This is not a traditional interpretation of contrast. Within the literature on learning and behavior, the term contrast has been used most often to describe an effect on behavior that occurs when a change in the rate of reinforcement in one component of a multiple schedule produces an opposite change in the rate of response in another component (Reynolds, 1961; Williams, 1983). In a typical demonstration of positive contrast, baseline responding is established on a two-component multiple schedule with equivalent schedules of reinforcement. When the second component is changed to an extinction schedule in a second phase, the rate of responding in the first (target) component increases, demonstrating positive anticipatory contrast.

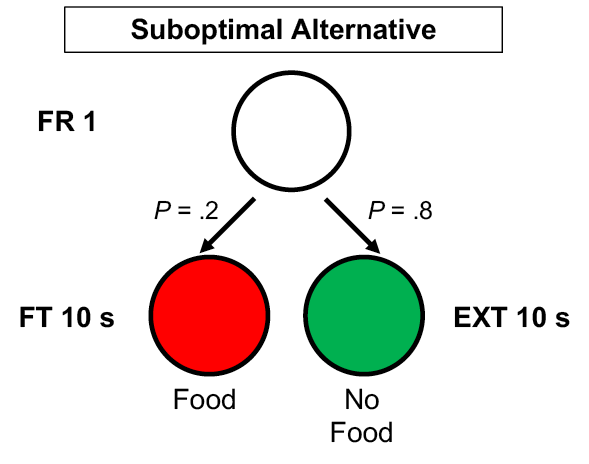

Demonstrations of contrast have typically employed multiple schedules with variable-interval reinforcement schedules, response-independent transitions between components, and unvarying component order. Figure 1 shows the positive contrast procedure used by Williams (1992) in which Component A produced reliably higher rates of responding than Component B because extinction in Component X always followed A. This positive contrast procedure is very different from the suboptimal choice procedure, which typically uses concurrent schedules of reinforcement and probabilistic determination of the following stimulus and schedule. An example of a suboptimal alternative (based on Stagner & Zentall, 2010) is shown in Figure 2. A single peck to an initial-link stimulus leads to one of two terminal-link stimuli. When red is presented, food follows after 10 s, but when green is presented, no food is delivered.

Figure 1. Schematic of positive behavioral contrast procedure used by Williams (1992). Each multiple schedule was assigned to either the left or right response key of a pigeon operant chamber and presented individually in a randomized fashion.

Figure 2. Schematic of the suboptimal choice alternative’s chained schedule, as described in Stagner and Zentall (2010).

Zentall (2019) uses the term contrast to explain the increased choice of a signaled suboptimal alternative. This is a curious use of the term because contrast, in its traditional sense, has been seen as a pattern of behavior and not an explanation of it. That aside, considering the many differences between the two procedures, it is not clear how the same mechanism would operate in both. Previous research has shown that the increase in response rate in the presence of the individually presented stimulus generated by the positive contrast procedure does not reflect increased value. That is to say, when probe trials provided a choice between Components A and B in Figure 1, Component B was reliably preferred despite the higher response rate in the presence of Component A when presented in the multiple schedule (Williams, 1991, 1992). Thus, value and response rate are in opposition, and these two dependent measures cannot be assumed to measure the same thing. Zentall cites the work of Case and Zentall (2018), and they, in turn, cited Williams’s (1983) review of behavioral contrast in their explanation of suboptimal preference, but it is not clear how that work is relevant. Thus, we have the following question about Zentall’s use of the term to explain suboptimal choice: In what way is positive behavioral contrast related to suboptimal preference, and what is the evidence that the same mechanism occurs in both procedures?

Contrast as Conditioned Reinforcement?

Another interpretation of Zentall’s use of the term contrast is that it is essentially a repackaging of the conditioned reinforcement explanation offered by Dunn and Spetch (1990; Spetch, Belke, Barnet, Dunn, & Pierce, 1990) that is now formally called the SiGN model (McDevitt et al., 2016).

According to Dunn and Spetch (1990),

A response in the initial link of the 50% chain is followed by either a timeout of 50 s or food delivery in 50 s. In this case, the onset of the terminal-link stimulus correlated with food delivery signals a delay reduction and can be expected to reinforce the initial-link response. (p. 214)

In Zentall’s (2019) terms, “preference for the suboptimal alternative may result from positive contrast between the expected value of reinforcement following choice of the suboptimal alternative and the value of the conditioned reinforcer that follows on half of the trials” (p. 2).

There also appears to be similarity in the analysis of the optimal alternative:

A terminal-link stimulus may function as a conditioned reinforcer only when its onset signals a reduction in delay over that signaled by other stimuli in the local context of that alternative. With FR 1 initial links, onset of the terminal-link stimulus on the 100% alternative should not function as a conditioned reinforcer because it does not signal a reduction in delay over that signaled by the initial-link peck that produced it. (Spetch et al., 1990, p. 220)

Zentall (2019) argues something very similar:

The fact that 50% signaled reinforcement was preferred over 100% reinforcement (Case & Zentall, 2018) suggests that there may also be contrast between the expected value of reinforcement (50% expected) and the obtained value of reinforcement (100% obtained) given choice of the suboptimal alternative, whereas there would be little contrast involving the optimal alternative (100% reinforcement expected and 100% reinforcement obtained). (p. 14)

Zentall continues: “The hypothesis that contrast between what is expected and what is signaled to occur is essentially the same as what McDevitt et al. (2016) refer to as the signal for good news” (p. 14). We agree with this assessment but also note that this explanation dates back to Dunn and Spetch (1990), which leads us to the following question: In what way, if any, is Zentall’s contrast explanation different from the one proposed by Dunn and Spetch? At present the two appear to be indistinguishable. If contrast is in fact “essentially the same” as the SiGN hypothesis, which explains suboptimal choice in terms of conditioned and primary reinforcement, then we are left to wonder how contrast could constitute a “third factor” of suboptimal choice. Correctly identifying Zentall’s contrast explanation as conditioned reinforcement would aid comparison with other models of conditioned reinforcement neglected by the present review (e.g., Cunningham & Shahan, 2018; Mazur, 1997).
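The delay-reduction reasoning quoted above from Dunn and Spetch (1990) and Spetch et al. (1990) can be made concrete with a rough numerical sketch. The function name `delay_reduction` and the simplifications are assumptions made here for illustration, not part of either model: initial-link time and the intertrial interval are ignored, and the average time to food signaled by the choice response is taken to be the terminal-link duration divided by the probability of food.

```python
def delay_reduction(p_food: float, terminal_link_s: float) -> float:
    """Delay reduction (in seconds) signaled by onset of the
    food-correlated terminal-link stimulus.

    Simplifying assumptions (illustrative, not from the source):
    - initial-link time and intertrial interval are ignored;
    - the average time to food signaled by the choice response
      is terminal_link_s / p_food.
    """
    if not 0 < p_food <= 1:
        raise ValueError("p_food must be in (0, 1]")
    time_to_food_at_choice = terminal_link_s / p_food
    time_to_food_at_signal = terminal_link_s
    return time_to_food_at_choice - time_to_food_at_signal


# 50-s terminal links, as in the Dunn and Spetch (1990) example quoted above.
reduction_50 = delay_reduction(0.5, 50.0)    # signaled 50% alternative
reduction_100 = delay_reduction(1.0, 50.0)   # 100% alternative
print(reduction_50, reduction_100)
```

Under these assumptions, the food-correlated stimulus on the 50% alternative signals a positive delay reduction, whereas the terminal-link stimulus on the 100% alternative signals none, which is the asymmetry both the conditioned reinforcement account and Zentall’s contrast account appeal to.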

In this commentary we have attempted to fill in some of the gaps in Zentall’s (2019) presentation of suboptimal choice by highlighting earlier work on which it has been based, as well as recognizing some contemporary treatments that show promise in extending our understanding of the mechanisms involved. In addition, we raise significant questions about Zentall’s contrast explanation that require answers if it is to be considered a unique and worthwhile explanation of suboptimal choice.

References

-

Baum, W. M. (2008). Understanding behaviorism: Behavior, culture, and evolution (2nd ed., pp. 59–79). Malden, MA: Wiley-Blackwell.

-

Case, J. P., & Zentall, T. R. (2018). Suboptimal choice in pigeons: Does the predictive value of the conditioned reinforcer alone determine choice? Behavioural Processes, 157, 320–326. doi:10.1016/j.beproc.2018.07.018

-

Chambers, C. (2017). The seven deadly sins of psychology: A manifesto for reforming the culture of scientific practice. Princeton, NJ: Princeton University Press.

-

Cunningham, P. J., & Shahan, T. A. (2018). Suboptimal choice, reward-predictive signals, and temporal information. Journal of Experimental Psychology: Animal Learning and Cognition, 44, 1–22. doi:10.1037/xan0000160

-

Dunn, R., & Spetch, M. L. (1990). Choice with uncertain outcomes: Conditioned reinforcement effects. Journal of the Experimental Analysis of Behavior, 53, 201–218. doi:10.1901/jeab.1990.53-201

-

Fantino, E., Dunn, R., & Meck, W. (1979). Percentage reinforcement and choice. Journal of the Experimental Analysis of Behavior, 32, 335–340. doi:10.1901/jeab.1979.32-335

-

Johnson, J. A. (2013, July 22). Are research psychologists more like detectives or lawyers? Psychology Today. Retrieved from https://www.psychologytoday.com/us/blog/cui-bono/201307/are-research-psychologists-more-detectives-or-lawyers-0

-

Kendall, S. B. (1974). Preference for intermittent reinforcement. Journal of the Experimental Analysis of Behavior, 21, 463–473. doi:10.1901/jeab.1974.21-463

-

Mazur, J. E. (1997). Choice, delay, probability, and conditioned reinforcement. Animal Learning & Behavior, 25, 131–147. doi:10.3758/BF03199051

-

McDevitt, M. A., Dunn, R. M., Spetch, M. L., & Ludvig, E. A. (2016). When good news leads to bad choices. Journal of the Experimental Analysis of Behavior, 105, 23–40. doi:10.1002/jeab.192

-

Reynolds, G. S. (1961). Behavioral contrast. Journal of the Experimental Analysis of Behavior, 4, 57–71. doi:10.1901/jeab.1961.4-57

-

Skinner, B. F. (1938). The behavior of organisms: An experimental analysis. New York: Appleton-Century. Retrieved from Internet Archive website: https://archive.org/details/TheBehaviorOfOrganisms

-

Skinner, B. F. (1953). Science and human behavior. New York: Free Press.

-

Skinner, B. F. (1981). Selection by consequences. Science, 213, 501–504. doi:10.1126/science.7244649

-

Smith, A. P., Bailey, A. R., Chow, J. J., Beckmann, J. S., & Zentall, T. R. (2016). Suboptimal choice in pigeons: Stimulus value predicts choice over frequencies. PLoS ONE, 11(7), 1–18. doi:10.1371/journal.pone.0159336

-

Smith, A. P., & Zentall, T. R. (2016). Suboptimal choice in pigeons: Choice is primarily based on the value of the conditioned reinforcer rather than overall reinforcement rate. Journal of Experimental Psychology: Animal Learning and Cognition, 42, 212–220. doi:10.1037/xan0000092

-

Spetch, M., Belke, T., Barnet, R., Dunn, R., & Pierce, W. (1990). Suboptimal choice in a percentage reinforcement procedure: Effects of signal condition and terminal-link length. Journal of the Experimental Analysis of Behavior, 53, 219–234. doi:10.1901/jeab.1990.53-219

-

Stagner, J. P., Laude, J. R., & Zentall, T. R. (2012). Pigeons prefer discriminative stimuli independently of the overall probability of reinforcement and of the number of presentations of the conditioned reinforcer. Journal of Experimental Psychology: Animal Behavior Processes, 38, 446–452. doi:10.1037/a0030321

-

Stagner, J. P., & Zentall, T. R. (2010). Suboptimal choice behavior by pigeons. Psychonomic Bulletin & Review, 17, 412–416. doi:10.3758/PBR.17.3.412

-

Thorndike, E. L. (1911). Animal intelligence: Experimental studies. New York: Macmillan. Retrieved from Internet Archive website: https://archive.org/details/animalintelligen00thor/page/n5

-

Vasconcelos, M., Machado, A., & Pandeirada, J. N. S. (2018). Ultimate explanations and suboptimal choice. Behavioural Processes, 152, 63–72. doi:10.1016/j.beproc.2018.03.023

-

Williams, B. A. (1983). Another look at contrast in multiple schedules. Journal of the Experimental Analysis of Behavior, 39, 345–384. doi:10.1901/jeab.1983.39-345

-

Williams, B. A. (1991). Behavioral contrast and reinforcement value. Animal Learning & Behavior, 19, 337–344. doi:10.3758/BF03197894

-

Williams, B. A. (1992). Inverse relations between preference and contrast. Journal of the Experimental Analysis of Behavior, 58, 303–312. doi:10.1901/jeab.1992.58-303

-

Wilton, R. N., & Clements, R. O. (1971). Observing responses and informative stimuli. Journal of the Experimental Analysis of Behavior, 15, 199–204. doi:10.1901/jeab.1971.15-199

-

Zentall, T. R. (2016). When humans and other animals behave irrationally. Comparative Cognition & Behavior Reviews, 11, 25–48. doi:10.3819/ccbr.2016.110002

-

Zentall, T. R. (2019). What suboptimal choice tells us about the control of behavior. Comparative Cognition & Behavior Reviews, 14, 1–17. doi:10.3819/CCBR.2019.140001

Figure 1. Commitment response procedure for the ephemeral reward task.

An interpretation of the ephemeral reward task that does not rely on the concept of impulsivity starts with Zentall’s differential reinforcement hypothesis just mentioned and then adds to it the idea that pigeons and rats may fail to associate G with the second reward because of interference by the intervening events, including the first reward and pecking the R key. In this light, the 20-s delay is effective because it increases the exposure to G, strengthens its memory trace, and reduces the interference effects, so that the association of G with the second reward is facilitated. To test this hypothesis, one could increase the duration of R following the first reward and see if, as this duration increased, preference for G decreased.

The Midsession Reversal Task

In the midsession reversal (MSR) task, given two stimuli (S1 and S2), choices of S1 are reinforced during the first 40 trials and choices of S2 during the last 40 trials. At the steady state, pigeons show anticipatory and perseverative errors, which are consistent with timing the moment of reversal from the beginning of the session. The surprising outcome is that the pigeons resort to timing rather than use local cues (e.g., a win-stay/lose-shift strategy).
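To see why reliance on timing is surprising, it helps to count the errors that a pure win-stay/lose-shift chooser would make on this task. The sketch below is only an idealization under stated assumptions (perfect memory for the previous trial, reinforcement of every correct choice, and an S1 choice on the first trial); the function name `wsls_errors` is hypothetical.

```python
def wsls_errors(n_trials: int = 80, reversal_after: int = 40) -> list:
    """Return the 1-based trial numbers on which an idealized
    win-stay/lose-shift chooser errs in a midsession reversal session.

    Assumptions (illustrative): the chooser starts on S1, every correct
    choice is reinforced, and the previous outcome is always remembered.
    """
    choice = "S1"
    errors = []
    for trial in range(1, n_trials + 1):
        correct = "S1" if trial <= reversal_after else "S2"
        if choice != correct:
            # Lose: record the error and shift to the other stimulus.
            errors.append(trial)
            choice = "S2" if choice == "S1" else "S1"
        # Win: stay with the current choice (nothing to change).
    return errors


print(wsls_errors())  # [41]
```

Such a chooser errs only on the first trial after the reversal, with no anticipatory errors at all, which is the benchmark against which the pigeons’ anticipatory and perseverative errors are judged.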

Zentall’s first account of these results was that the pigeons have difficulty remembering their previous choices and outcomes, both when the task requires a visual discrimination (i.e., S1 = red key, S2 = green key) and when it requires a spatial discrimination (i.e., S1 = left key, S2 = right key) with a relatively long intertrial interval (ITI; e.g., greater than 1.5 s; Laude, Stagner, Rayburn-Reeves, & Zentall, 2014; Rayburn-Reeves, Laude, & Zentall, 2013; Rayburn-Reeves, Molet, & Zentall, 2011). Given the alleged difficulty remembering the trial events, the chosen stimulus, and the received outcome, pigeons use the time from the beginning of the session as a cue to switch from S1 to S2.

The hypothesis of memory limitations is more plausible for visual than for spatial discriminations: Whereas Williams (1972) found that pigeons do not learn a win-stay/lose-shift strategy when a color discrimination is required, Shimp (1976) found that pigeons learn this strategy with a spatial discrimination, even when the ITI lasts 6 s. Moreover, focusing on the pigeons’ memory difficulties may well explain the relatively small number of anticipatory and perseverative errors around the reversal moment, but only at the cost of failing to explain the large number of correct choices on the remaining trials! That is, we should not forget the obvious fact that on the vast majority of trials, pigeons perform correctly.

Furthermore, timing requires memory. Timing models such as the scalar expectancy theory (Gibbon, 1977, 1991) and the learning-to-time model (Machado, 1997; Machado, Malheiro, & Erlhagen, 2009) include memory components: memory bins that store reinforcement times in scalar expectancy theory, and variable-strength associative links connecting behavioral states to operant responses in the learning-to-time model. Any account that substitutes timing for memory to explain the MSR task results may shift the focus from local cues (responses and their outcomes) to a global cue (time), but it cannot do without the learning of response-outcome relations.

Zentall’s second account argues that pigeons use timing due to the symmetry and excessive number of reliable cues in the MSR task. The cues for reinforcement, in particular, may compete with one another in complex ways, both before and after the reversal:

Why the pigeons use the time from the start of the trial [sic] to the reversal rather than the feedback from their choices is not because of the absence of reliable cues but presumably because of the symmetry or excess of reliable cues. . . . As the pigeon approaches the midpoint of the session, the current cues may indicate that S1 is the correct response; however, anticipation of responding to S2 competes with the correct S1 response. Similarly, after the reversal, memory of the correct S1 response competes with the current feedback from the correct S2 response. (Zentall, 2019, p. 12)

Timing in the MSR task is no longer justified by memory limitations but by cue competition. However, Zentall’s cue-competition account needs to be further elaborated, preferably in the form of a dynamic model, to accommodate the following findings. In the standard MSR task, when the ITI doubles, pigeons start to choose S2 around the first quarter of the session, which corresponds approximately to the time of the original reversal (McMillan & Roberts, 2012; Smith, Beckmann, & Zentall, 2017). This result supports the idea that time from the beginning of the session is indeed the main cue for switching from S1 to S2, and it is compatible with the cue-competition hypothesis. But when the ITI’s duration is halved, pigeons switch shortly after the reversal, not at the end of the session, which would be the time corresponding to the original reversal (McMillan & Roberts, 2012; unpublished data from our lab also confirm this effect). This result suggests that time is not the only operative cue, that pigeons remain sensitive to the outcome of their choices (e.g., extinction following several S1 choices), remember the response-outcome pairing of the previous trial, and adapt their behavior to the current contingencies when time no longer predicts reinforcement. In any case, how time and local cues combine to cue behavior remains unclear.
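The arithmetic behind these ITI manipulations can be sketched as follows. The example assumes, as a simplification not stated in the source, that trial duration is proportional to the ITI, and it uses a hypothetical 5-s training ITI; the function name `timing_predicted_switch_trial` is likewise an illustrative choice.

```python
def timing_predicted_switch_trial(reversal_trial: int,
                                  trained_iti_s: float,
                                  test_iti_s: float) -> float:
    """Trial reached at test when elapsed session time equals the time
    at which the reversal occurred in training.

    Assumption (illustrative): trial duration is proportional to the ITI,
    so elapsed time is trial number multiplied by ITI.
    """
    return reversal_trial * trained_iti_s / test_iti_s


# Reversal after trial 40 of 80; the 5-s training ITI is hypothetical.
doubled = timing_predicted_switch_trial(40, 5.0, 10.0)
halved = timing_predicted_switch_trial(40, 5.0, 2.5)
print(doubled, halved)  # 20.0 80.0
```

If time were the only cue, pigeons would switch around Trial 20 with the doubled ITI and only around Trial 80 with the halved ITI. The first prediction matches the reported data and the second does not, which is the asymmetry that a dynamic model would need to capture.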

In addition, according to Zentall’s cue-competition hypothesis, when there are fewer cues available and S1 is the only reliable source of information (i.e., S1 = 100% and S2 = 20% reinforcement), pigeons adhere to the feedback provided by S1. They do not incur anticipatory errors and reduce perseverative errors significantly. Although Zentall does not elaborate the case, the cue-competition hypothesis seems to predict that when S2 is the only reliable source of information (S1 = 20%, S2 = 100% reinforcement), by virtue of the asymmetry and reduction of the number of reliable cues, overall performance should also improve. The data suggest otherwise, though. Pigeons make a large number of anticipatory errors and overall performance worsens (Santos, Soares, Vasconcelos, & Machado, in press).

Unfortunately, Zentall did not extend his account to the case of S1 = 20% and S2 = 100% reinforcement. Reasoning by analogy yields the following: When the only reliable source of information is S2, pigeons adhere to the feedback of S2, that is, they continue to peck S2 after reinforcement and switch to S1 after nonreinforcement. But feedback from S2 can be obtained only by pecking S2. Hence, Zentall’s account seems to predict anticipatory errors. However, the account does not predict the proportion of these errors, nor does it explain why errors remain relatively rare during the very first trials.

An alternative explanation is that the difference in reinforcement probabilities between S1 (20%) and S2 (100%) biases the pigeons’ time estimate of the reversal moment. But in Zentall’s cue-competition account, pigeons would not need to time when there is only one reliable cue. More generally, it remains to be explained why making S1 or S2 the only reliable source of information has such asymmetrical effects on performance (Santos et al., in press). In the absence of a quantitative dynamic model, cue competition remains so vague that its empirical test is virtually impossible.

Part 3. Beyond the Heuristic Value of Heuristics

After analyzing the various cases of suboptimal choice, Zentall concludes that “suboptimal behavior can be explained in terms of evolved heuristics that work reasonably well in nature but sometimes fail under laboratory conditions” (p. 14). This conclusion may be premature, because the evidence for the specific heuristics is conspicuously missing. With respect to the less-is-better effect, Zentall does not show that, in their natural settings, animals choose based on the average quality of each option and not on the quantity of each option or, more likely, on both quality and quantity. With respect to the ephemeral reward task, no specific, well-defined heuristic was proposed. With respect to the MSR task, Zentall also does not show that in natural settings animals rely on timing when local cues are ambiguous, excessive, or symmetric.

Suboptimal choice behavior seems to demand heuristics. But some cases of apparent suboptimal choice may turn out to be cases of optimal choice when we unravel a hitherto unknown biological function; for example, aversion to fragmented food may prevent the animal from eating discarded food. Other cases of suboptimal behavior may simply expose the shortcomings of our current theories, for example, in identifying the functional choice set or the operative constraints in a learning situation. Zentall himself provides an example when he discusses self-control choices that seem irrational until we consider the animal’s time horizon: The high-probability amount did not yield enough nutrients to keep the animal alive for the night. Once the researchers unraveled this critical variable, the suboptimal tag was abandoned, and with it the reason for the heuristic.

Reading the various accounts of suboptimal choice that Zentall proposes—all revealing their author’s ingenuity and creativity—one cannot help but think that without conceptual clarity regarding definition and use, and without either a plausibility argument or a modicum of supporting evidence, heuristics remain “just so stories.” But even when we define heuristics clearly and provide either a strong plausibility argument or empirical evidence supporting them, we still need to coordinate heuristics with known behavioral processes. Take the timing heuristic inspired by the MSR task results. Discriminative stimuli interact in different ways: They may have additive or subtractive effects on behavior, for example. They may even cancel each other, as when one stimulus cues the animal to move forward and another stimulus cues the animal to move backward. In this case, a third stimulus may determine the animal’s behavior (e.g., a displacement activity). Stimulus interactions are behavioral processes, or part of behavioral processes, but the operation of a behavioral process is not a heuristic. And if we say that the heuristic consists of using a global cue (e.g., time) when local cues (responses and their outcomes) are excessive or unreliable, we still need to identify the basic mechanisms that implement the heuristic—that detect when local cues are excessive or unreliable, for example.

To conclude, what indeed does suboptimal choice tell us about the control of behavior? Like any other set of surprising findings, suboptimal choice tells us that we do not understand the phenomenon of choice—our theories of choice are either wrong or incomplete. Furthermore, each specific instance of suboptimal choice tells us that we need to conduct more studies to characterize the phenomenon and identify its boundary conditions. For example, we do not know whether the less-is-better effect extends to mixtures of three or more differently valued items, or whether the ephemeral reward task effect occurs when the two options remain spatially different (e.g., a concurrent chain schedule with two white keys as initial links, one terminal link consisting of a 2-s red light followed by food, and the other terminal link consisting of a 2-s green light followed by food and then a 2-s red light also followed by food).

We also need to formulate new hypotheses about the behavioral processes of suboptimal choice, clarify them, and test them. To that end, knowledge of an animal’s phylogenetic and ontogenetic histories and of how the animal adapts to its natural environment is likely to be crucial. But this knowledge may be as crucial as it is hard to obtain; most of our statements about phylogenetic history or reinforcement history qualify more as ad hoc speculation than knowledge. As for heuristics, to move them from the domain of private science where “anything goes” to the domain of public science where conceptual coherence and empirical sensitivity rule, we need to define them clearly, identify the conditions that activate them, and coordinate them with currently known behavioral processes. Otherwise, heuristics will multiply and range widely yet may move us little further forward.

To make matters more confusing, Zentall advances in the Discussion section yet another hypothesis: Option G includes two rewards, delivered d1 and d2 seconds after choice; option R includes only one reward delivered d1 seconds after choice. If the value of an option equals the average of the values of its rewards, and if the value of a reward decays with delay, then G will have less value than R. The hypothesis assumes that, regardless of impulsivity, the animal learned to associate the choice of G with the second reward!
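The averaging hypothesis described in the preceding paragraph can be illustrated numerically. The source says only that reward value decays with delay; the hyperbolic form, the decay parameter `k`, and the delays used below are assumptions chosen for illustration, and any strictly decreasing value function would give the same ordering.

```python
def reward_value(amount: float, delay_s: float, k: float = 0.2) -> float:
    """Value of one reward after a delay.

    Hyperbolic decay is an assumed form; the source states only that
    value decays with delay.
    """
    return amount / (1.0 + k * delay_s)


def option_value(delays_s, amount: float = 1.0, k: float = 0.2) -> float:
    """Value of an option as the average of the values of its rewards."""
    values = [reward_value(amount, d, k) for d in delays_s]
    return sum(values) / len(values)


d1, d2 = 2.0, 6.0                  # hypothetical delays, with d2 > d1
value_r = option_value([d1])       # option R: one reward at d1
value_g = option_value([d1, d2])   # option G: rewards at d1 and d2
print(value_g < value_r)           # True
```

Because the later reward is worth less than the earlier one, averaging it in pulls the value of G below that of R even though G delivers more food overall.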

References

-

Beran, M., Evans, T., & Ratliff, C. (2009). Perception of food amounts by chimpanzees (Pan troglodytes): The role of magnitude, contiguity, and wholeness. Journal of Experimental Psychology: Animal Behavior Processes, 35, 516–524. doi:10.1037/a0015488

-

Beran, M., Ratliff, C., & Evans, T. (2009). Natural choice in chimpanzees (Pan troglodytes): Perceptual and temporal effects on selective value. Learning and Motivation, 40, 186–196. doi:10.1016/j.lmot.2008.11.002

Breland, K., & Breland, M. (1961). The misbehavior of organisms. American Psychologist, 16, 681–684. doi:10.1037/h0040090

Chase, R., & George, D. (2018). More evidence that less is better: Sub-optimal choice in dogs. Learning & Behavior, 46, 462–471. doi:10.3758/s13420-018-0326-1

Gibbon, J. (1977). Scalar expectancy theory and Weber’s law in animal timing. Psychological Review, 84, 279–325. doi:10.1037/0033-295X.84.3.279

Gibbon, J. (1991). Origins of scalar timing. Learning and Motivation, 22, 3–38. doi:10.1016/0023-9690(91)90015-Z

Kralik, J., Xu, E., Knight, E., Khan, S., & Levine, W. (2012). When less is more: Evolutionary origins of the affect heuristic. PLoS ONE, 7, e46240. doi:10.1371/journal.pone.0046240

Laude, J. R., Stagner, J. P., Rayburn-Reeves, R., & Zentall, T. R. (2014). Midsession reversals with pigeons: Visual versus spatial discriminations and the intertrial interval. Learning & Behavior, 42, 40–46. doi:10.3758/s13420-013-0122-x

Machado, A. (1997). Learning the temporal dynamics of behavior. Psychological Review, 104, 241–265. doi:10.1037/0033-295X.104.2.241

Machado, A., Malheiro, M., & Erlhagen, W. (2009). Learning to time: A perspective. Journal of the Experimental Analysis of Behavior, 92, 423–458. doi:10.1901/jeab.2009.92-423

McMillan, N., & Roberts, W. (2012). Pigeons make errors as a result of interval timing in a visual, but not a visual-spatial, midsession reversal task. Journal of Experimental Psychology: Animal Behavior Processes, 38, 440–445. doi:10.1037/a0030192

Mowrer, O. H. (1947). On the dual nature of learning—A reinterpretation of “conditioning” and “problem solving.” Harvard Educational Review, 17, 102–148.

Pattison, K., & Zentall, T. R. (2014). Suboptimal choice by dogs: When less is better than more. Animal Cognition, 17, 1019–1022. doi:10.1007/s10071-014-0735-2

Rayburn-Reeves, R. M., Laude, J. R., & Zentall, T. R. (2013). Pigeons show near-optimal win-stay/lose-shift performance on a simultaneous-discrimination, midsession reversal task with short intertrial intervals. Behavioural Processes, 92, 65–70. doi:10.1016/j.beproc.2012.10.011

Rayburn-Reeves, R. M., Molet, M., & Zentall, T. R. (2011). Simultaneous discrimination reversal learning in pigeons and humans: Anticipatory and perseverative errors. Learning & Behavior, 39, 125–137. doi:10.3758/s13420-010-0011-5

Sánchez-Amaro, A., Peretó, M., & Call, J. (2016). Differences in between-reinforcer value modulate the selective-value effect in great apes (Pan troglodytes, P. paniscus, Gorilla gorilla, Pongo abelii). Journal of Comparative Psychology, 130, 1–12. doi:10.1037/com0000014

Santos, C., Soares, C., Vasconcelos, M., & Machado, A. (in press). The effect of reinforcement probability on time discrimination in the midsession reversal task. Journal of the Experimental Analysis of Behavior.

Shimp, C. (1976). Short-term memory in the pigeon: The previously reinforced response. Journal of the Experimental Analysis of Behavior, 26, 487–493. doi:10.1901/jeab.1976.26-487

Silberberg, A., Widholm, J., Bresler, D., Fujita, K., & Anderson, J. (1998). Natural choice in nonhuman primates. Journal of Experimental Psychology: Animal Behavior Processes, 24, 215–228. doi:10.1037/0097-7403.24.2.215

Smith, A., Beckmann, J., & Zentall, T. R. (2017). Mechanisms of midsession reversal accuracy: Memory for preceding events and timing. Journal of Experimental Psychology: Animal Learning and Cognition, 43, 62–71. doi:10.1037/xan0000124

Solomon, R. L., & Wynne, L. C. (1954). Traumatic avoidance learning: The principles of anxiety conservation and partial irreversibility. Psychological Review, 61, 353–385. doi:10.1037/h0054540

Williams, B. (1972). Probability learning as a function of momentary reinforcement probability. Journal of the Experimental Analysis of Behavior, 17, 363–368. doi:10.1901/jeab.1972.17-363

Zentall, T. R. (2019). What suboptimal choice tells us about the control of behavior. Comparative Cognition & Behavior Reviews, 14, 1–17. doi:10.3819/CCBR.2019.140001

Zentall, T. R., Laude, J., Case, J., & Daniels, C. (2014). Less means more for pigeons but not always. Psychonomic Bulletin & Review, 21, 1623–1628. doi:10.3758/s13423-014-0626-1